Hello learners! Welcome to the next episode of Neural Networks. Today, we are learning about a neural network architecture named Vision Transformer, or ViT, which is designed for image classification. Neural networks have been at the center of deep learning over the last decade, and their study and application look set to continue because they are now part of daily life. The choice of architecture plays a major role in how well these networks perform.

In this session, we will start with an introduction to the Vision Transformer. We'll then go through how it works, step by step. After that, we'll look at the differences between ViT and CNN, and in the end, we'll discuss the applications of vision transformers. If you want to learn all of this, let's start reading.

What is Vision Transformer Architecture?

The vision transformer is a neural network architecture designed for image recognition. It is one of the more recent advances in deep learning and has had a major impact on image processing and recognition. It has challenged the dominance of convolutional neural networks (CNNs), which had long been the standard architecture for image recognition systems.

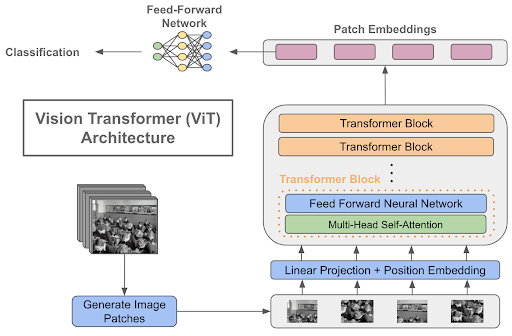

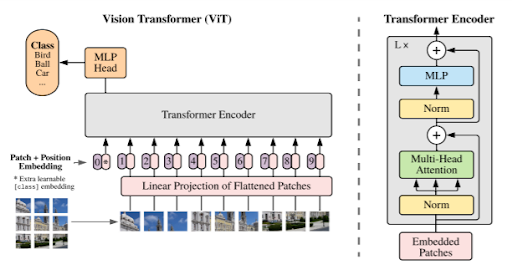

The ViT works in the following way:

It divides the image into non-overlapping patches of a fixed size

Each patch is linearly embedded

Position embeddings are added to the patch embeddings

The resulting sequence of vectors is fed into a transformer encoder, the same kind of architecture used for text
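A quick way to see what these steps produce is to count the sizes involved. The Python sketch below assumes a square 224×224 RGB image and 16×16 patches (the "16x16 words" of the original paper's title); `vit_token_count` is a hypothetical helper written only for illustration, and the extra sequence position it counts is for the classification token.

```python
def vit_token_count(image_size: int, patch_size: int, channels: int = 3) -> dict:
    """Sizes involved when a square image is turned into a ViT token sequence (illustrative helper)."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size
    num_patches = patches_per_side ** 2
    patch_dim = patch_size * patch_size * channels  # values in one flattened patch
    return {
        "patches_per_side": patches_per_side,
        "num_patches": num_patches,
        "patch_dim": patch_dim,
        "sequence_length": num_patches + 1,  # +1 for the classification token
    }

print(vit_token_count(224, 16))
# {'patches_per_side': 14, 'num_patches': 196, 'patch_dim': 768, 'sequence_length': 197}
```

With these settings, the image becomes 196 patches of 768 values each, and the encoder sees a sequence of 197 vectors.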

We will talk more about how it works later, but first, let's look at how ViT was introduced to understand its importance in image recognition.

Vision Transformer Publication

The vision transformer was introduced in the paper “An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale,” first released as a preprint in 2020. The paper was written by a team of researchers at Google, including Alexey Dosovitskiy, Lucas Beyer, and Alexander Kolesnikov, and was published at the International Conference on Learning Representations (ICLR) in 2021. It introduces several key concepts, including:

Image Tokenization

Transformer Encoder for Images

Positional Embeddings

Scalability

Comparison with CNNs

Pre-training and Fine-tuning

Some of these features will be discussed in this article.

Features of Vision Transformer Architecture

The vision transformer is a relatively new architecture, but it has quickly become competitive with, and on many benchmarks better than, established techniques because of its strong performance. Here are some features that set it apart:

Transformer Architecture in ViT

ViT is built on the transformer architecture. The transformer relies on the self-attention mechanism, which lets every element of an input sequence gather information from every other element. ViT first divides the image into patches, and the transformer then captures how these patches relate to each other across the whole image.
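The core of the transformer, scaled dot-product self-attention, fits in a few lines. This is a minimal single-head sketch in NumPy, assuming toy sizes and random matrices `w_q`, `w_k`, `w_v` in place of learned weights; real ViT layers run several heads in parallel.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence of patch embeddings.

    x: (num_tokens, dim); w_q, w_k, w_v: (dim, head_dim).
    Returns the attended output (num_tokens, head_dim) and the attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])        # (num_tokens, num_tokens): every token vs every token
    scores -= scores.max(axis=-1, keepdims=True)    # numerical stability before exp
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over each row
    return weights @ v, weights

rng = np.random.default_rng(0)
tokens = rng.normal(size=(5, 8))                    # 5 patch embeddings of toy size 8
w_q, w_k, w_v = (rng.normal(size=(8, 4)) for _ in range(3))
out, attn = self_attention(tokens, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (5, 4) (5, 5)
```

Row i of `attn` holds how strongly token i attends to each token in the sequence; each row sums to 1 because of the softmax.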

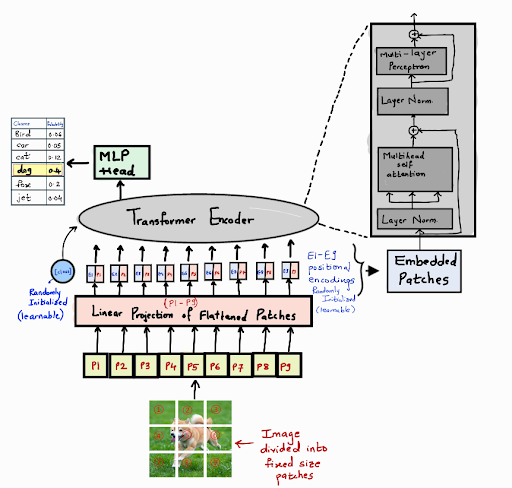

Classification Token in ViT

The classification token is an important feature of ViT that allows it to represent global information about the image effectively. This information is gathered from the patches created at the start of the ViT pipeline.

The classification token is an extra learnable embedding placed at the front of the sequence of patch embeddings. It does not correspond to any patch; instead, it acts as a central point that collects information from all the patches, so that by the end of the encoder it holds a single vector summarizing the whole image.

The classification token takes part in the self-attention mechanism of the transformer encoder just like the patch tokens. In every layer, it attends to all the patches, and as a result, it gathers information about the entire image.

After the final encoder layer, the state of the classification token serves as the representation of the whole image and is passed on for classification.
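The classification token is simply one extra vector placed at position 0 of the sequence. The NumPy sketch below assumes 196 patch embeddings of size 768 (random stand-ins here) and a zero-valued token in place of a learned one; `prepend_cls_token` is a hypothetical helper.

```python
import numpy as np

def prepend_cls_token(patch_embeddings, cls_token):
    """Put the classification token in front of the patch embeddings: (N, D) -> (N + 1, D)."""
    return np.concatenate([cls_token[None, :], patch_embeddings], axis=0)

rng = np.random.default_rng(0)
num_patches, dim = 196, 768
patch_embeddings = rng.normal(size=(num_patches, dim))  # stand-in for embedded image patches
cls_token = np.zeros(dim)  # a learnable parameter in a real model; zeros here for illustration

sequence = prepend_cls_token(patch_embeddings, cls_token)
print(sequence.shape)  # (197, 768)

# After the encoder runs on `sequence`, the image representation is the row at index 0,
# i.e. encoder_output[0], because that is where the classification token was placed.
```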

Training on Large Datasets

The vision transformer architecture can be trained on very large datasets and benefits strongly from them. In the original paper, ViT trained on ImageNet alone performed somewhat below comparable CNNs, and it matched or surpassed them only after pre-training on larger datasets such as ImageNet-21k or JFT-300M, from which it learns general features of images. Once pre-training is complete, the model is fine-tuned on a smaller dataset to adapt it to the target domain.

Scalability in ViT

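One of the best features of ViT is its scalability, which makes it a strong choice for image recognition. Its performance keeps improving as the model and the pre-training dataset grow larger. It also adapts to a change in image resolution without changing the architecture: when the resolution increases, for example when fine-tuning at a higher resolution than was used in pre-training, the patch size stays the same and the model simply processes a longer sequence of patches, with the pre-trained position embeddings interpolated to fit the new patch grid. This makes it possible to work on high-resolution images and extract fine-grained information from them.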
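When the resolution changes, ViT keeps the patch size fixed, so the patch grid grows (for example from 14×14 to 24×24 when going from 224 to 384 pixels with 16×16 patches), and the original paper adapts the pre-trained position embeddings by 2D interpolation. Below is a minimal NumPy sketch of that idea using bilinear interpolation. It is an illustration under assumptions, not the paper's implementation: `resize_pos_embed` is a hypothetical helper, it assumes a square grid, it leaves out the classification token's own embedding (which would be kept as-is), and the embeddings are random stand-ins.

```python
import numpy as np

def resize_pos_embed(pos_embed, new_grid):
    """Bilinearly resize patch position embeddings from an old square grid to new_grid x new_grid.

    pos_embed: (old_grid * old_grid, dim), classification-token embedding excluded.
    """
    n, dim = pos_embed.shape
    old_grid = int(round(np.sqrt(n)))
    assert old_grid * old_grid == n, "expected a square grid of patches"
    grid = pos_embed.reshape(old_grid, old_grid, dim)

    # Fractional position on the old grid for each row/column of the new grid.
    coords = np.linspace(0, old_grid - 1, new_grid)
    lo = np.floor(coords).astype(int)
    hi = np.minimum(lo + 1, old_grid - 1)
    frac = coords - lo

    # Interpolate between neighbouring rows, then between neighbouring columns.
    rows = grid[lo] * (1 - frac)[:, None, None] + grid[hi] * frac[:, None, None]
    both = rows[:, lo] * (1 - frac)[None, :, None] + rows[:, hi] * frac[None, :, None]
    return both.reshape(new_grid * new_grid, dim)

rng = np.random.default_rng(0)
pos_224 = rng.normal(size=(14 * 14, 768))  # stand-in for embeddings learned at 224x224
pos_384 = resize_pos_embed(pos_224, 24)    # 384 / 16 = 24 patches per side
print(pos_384.shape)  # (576, 768)
```

The corner embeddings are carried over exactly, and the values in between are blended from their nearest neighbours on the old grid.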

Working of the Vision Transformer Architecture

Now that we know the basic terms and working style of vision transformers, we can go through the step-by-step process of how the vision transformer architecture works:

Image Tokenization in ViT

The first step in the vision transformer is to take the input image and divide it into non-overlapping patches of a fixed size, such as 16×16 pixels. This is called image tokenization, and each patch is treated as a token, much like a word in a sentence. Placed back together, the patches reconstruct the original input image. This step provides the basis for the next steps.
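Splitting an image into non-overlapping patches is a reshape-and-transpose in NumPy. This sketch assumes an (H, W, C) array whose height and width are multiples of the patch size; `patchify` and `unpatchify` are hypothetical helper names, and the round trip checks that the patches reassemble into the original image.

```python
import numpy as np

def patchify(image, patch_size):
    """Split an (H, W, C) image into non-overlapping patches, each flattened to one row."""
    h, w, c = image.shape
    if h % patch_size or w % patch_size:
        raise ValueError("image height and width must be multiples of patch_size")
    gh, gw = h // patch_size, w // patch_size
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)  # (gh, gw, patch_size, patch_size, c)
    return patches.reshape(gh * gw, patch_size * patch_size * c)  # row-major patch order

def unpatchify(patches, image_shape, patch_size):
    """Inverse of patchify: put the patches back in place to rebuild the image."""
    h, w, c = image_shape
    gh, gw = h // patch_size, w // patch_size
    grid = patches.reshape(gh, gw, patch_size, patch_size, c)
    return grid.transpose(0, 2, 1, 3, 4).reshape(h, w, c)

rng = np.random.default_rng(0)
img = rng.random((224, 224, 3))
tokens = patchify(img, 16)
print(tokens.shape)  # (196, 768)
print(np.array_equal(unpatchify(tokens, img.shape, 16), img))  # True
```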

Linear Embedding in ViT

Up to this point, the information in ViT is still in pictorial form. Each patch is now flattened into a vector and multiplied by a trainable linear projection, producing a patch embedding of fixed size. This converts the patches into the format that the transformer expects.
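The linear embedding is a single matrix multiplication shared by all patches. The sketch below assumes 16×16×3 patches (768 values each), an embedding size of 768, and randomly initialized weights standing in for learned ones; `linear_patch_embedding` is a hypothetical helper.

```python
import numpy as np

def linear_patch_embedding(patches, weight, bias):
    """Project flattened patches (N, P*P*C) to embeddings (N, D) with one shared linear layer."""
    return patches @ weight + bias

rng = np.random.default_rng(0)
patch_dim, embed_dim = 16 * 16 * 3, 768
patches = rng.random((196, patch_dim))                        # 196 flattened 16x16 RGB patches
weight = rng.normal(scale=0.02, size=(patch_dim, embed_dim))  # learned in a real model
bias = np.zeros(embed_dim)
embeddings = linear_patch_embedding(patches, weight, bias)
print(embeddings.shape)  # (196, 768)
```

Many implementations compute the same projection as a convolution whose kernel size and stride both equal the patch size, which combines patch extraction and embedding in one step.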

Positional Embedding in ViT

The next step is to give the patches spatial information, and for this, positional embeddings are required. These are added to the patch embeddings and tell the model where each patch is located in the image.

These embeddings are an important part of ViT because self-attention on its own treats the input as an unordered set: without them, the model would have no information about where each patch came from or how the patches are arranged. Adding them allows the model to use the spatial layout of the input.
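The reason position embeddings are needed can be checked numerically. In this NumPy sketch (toy sizes, random values, and a parameter-free attention where queries, keys, and values are the inputs themselves, all assumptions for brevity), shuffling the patches without position embeddings only reorders the output, so the model cannot tell the orders apart; once position embeddings are added, the shuffled input produces a genuinely different result.

```python
import numpy as np

def attention(x):
    """Parameter-free self-attention (queries = keys = values = x), kept minimal for the demo."""
    scores = x @ x.T / np.sqrt(x.shape[-1])
    w = np.exp(scores - scores.max(axis=-1, keepdims=True))
    w /= w.sum(axis=-1, keepdims=True)
    return w @ x

rng = np.random.default_rng(0)
patches = rng.normal(size=(4, 8))    # 4 embedded patches of toy size 8
pos_embed = rng.normal(size=(4, 8))  # one embedding per position (learned in ViT, random here)
perm = np.array([2, 0, 3, 1])        # a shuffled patch order

# Without position embeddings, shuffling the input just shuffles the output in the same way.
same_without_pos = np.allclose(attention(patches[perm]), attention(patches)[perm])

# With position embeddings added by position, the shuffled image gives a different result.
same_with_pos = np.allclose(attention(patches[perm] + pos_embed),
                            attention(patches + pos_embed)[perm])
print(same_without_pos, same_with_pos)  # True False
```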

Transformer Encoding in ViT

Once the above steps are complete, the sequence of embedded patches is passed to the transformer encoder for processing. The encoder consists of multiple layers, each containing a multi-head self-attention block and a feed-forward neural network (an MLP), with layer normalization and residual connections around both.

Here, the self-attention mechanism captures the relationships between different parts of the input. As a result, the model takes the following into account:

The global context of the image

Long-range dependencies in the image
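One encoder layer can be sketched in NumPy as below. It follows the layout described in the ViT paper (layer normalization before each block, a residual connection after it, and an MLP with a GELU activation), but it is a simplified illustration: the LayerNorm scale and shift parameters and the attention biases are omitted, the sizes are toy values, the weights are random, and the keys in `params` are hypothetical names.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)  # learnable scale/shift omitted for brevity

def gelu(x):
    # tanh approximation of the GELU activation
    return 0.5 * x * (1 + np.tanh(np.sqrt(2 / np.pi) * (x + 0.044715 * x**3)))

def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_attention(x, w_qkv, w_out, num_heads):
    n, d = x.shape
    head_dim = d // num_heads
    q, k, v = np.split(x @ w_qkv, 3, axis=-1)  # each (n, d)
    # reshape to (num_heads, n, head_dim) so each head attends independently
    q, k, v = (t.reshape(n, num_heads, head_dim).transpose(1, 0, 2) for t in (q, k, v))
    weights = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(head_dim))  # (heads, n, n)
    heads = weights @ v                                              # (heads, n, head_dim)
    return heads.transpose(1, 0, 2).reshape(n, d) @ w_out            # merge heads, project

def encoder_block(x, params, num_heads):
    # Pre-norm residual layout: x + MSA(LN(x)), then x + MLP(LN(x))
    x = x + multi_head_attention(layer_norm(x), params["w_qkv"], params["w_out"], num_heads)
    hidden = gelu(layer_norm(x) @ params["w_fc1"] + params["b_fc1"])
    return x + hidden @ params["w_fc2"] + params["b_fc2"]

rng = np.random.default_rng(0)
n, d, mlp_dim, heads = 197, 64, 128, 4  # toy sizes (ViT-Base uses d=768, 12 heads, mlp_dim=3072)
params = {
    "w_qkv": rng.normal(scale=0.02, size=(d, 3 * d)),
    "w_out": rng.normal(scale=0.02, size=(d, d)),
    "w_fc1": rng.normal(scale=0.02, size=(d, mlp_dim)),
    "b_fc1": np.zeros(mlp_dim),
    "w_fc2": rng.normal(scale=0.02, size=(mlp_dim, d)),
    "b_fc2": np.zeros(d),
}
x = rng.normal(size=(n, d))
y = encoder_block(x, params, heads)
print(y.shape)  # (197, 64)
```

A full ViT stacks several such layers (12 in ViT-Base), each with its own weights; because the output has the same shape as the input, the layers can be stacked directly.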

Working of the Classification Head in ViT

As we discussed before, the classification token collects information from all the patches and represents the entire image. After the last encoder layer, its output is fed into the classification head, a small feed-forward network (a single linear layer during fine-tuning) that produces a score for each class label. The class with the highest score is the model's prediction.
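The classification step can be sketched in NumPy as: take row 0 of the encoder output (the classification token), normalize it, and apply a linear layer followed by a softmax. The class names, sizes, and random weights below are hypothetical, and the final layer normalization omits its learnable scale and shift.

```python
import numpy as np

def classify(encoder_output, w_head, b_head, class_names):
    """Map the final classification-token state (row 0) to class probabilities."""
    cls_state = encoder_output[0]
    # final layer normalisation (learnable scale/shift omitted for brevity)
    cls_state = (cls_state - cls_state.mean()) / np.sqrt(cls_state.var() + 1e-6)
    logits = cls_state @ w_head + b_head   # one score per class
    probs = np.exp(logits - logits.max())  # softmax, shifted for numerical stability
    probs /= probs.sum()
    return class_names[int(np.argmax(probs))], probs

rng = np.random.default_rng(0)
class_names = ["cat", "dog", "bird"]         # hypothetical labels
encoder_output = rng.normal(size=(197, 64))  # toy output: 1 classification token + 196 patches
w_head = rng.normal(scale=0.1, size=(64, len(class_names)))
b_head = np.zeros(len(class_names))

label, probs = classify(encoder_output, w_head, b_head, class_names)
print(label, probs.round(3))
```

Only row 0 is read here, so editing the patch rows of `encoder_output` directly does not change the prediction; in a full model the patches influence the result through attention inside the encoder layers.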

Training Process of ViT

Vision transformers are pre-trained on large datasets, which makes the later training on a specific task faster and more effective. ViT is trained in two phases:

Pre-training, in which a large dataset is used. Here, the model learns the general features of images.

Fine-tuning, in which a smaller dataset related to the target task is used to train the model on task-specific features.
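For the fine-tuning phase, the ViT paper describes removing the pre-trained prediction head and attaching a zero-initialized D×K layer, where D is the embedding size and K the number of classes in the new task. The NumPy sketch below shows only that parameter swap, assuming a hypothetical parameter dictionary with `backbone`, `head_w`, and `head_b` entries and, for simplicity, a single-layer pre-training head; the gradient-based training itself is not shown.

```python
import numpy as np

def replace_head_for_finetuning(params, num_new_classes):
    """Return parameters ready for fine-tuning: same backbone, new zero-initialised head."""
    new_params = dict(params)  # shallow copy: backbone arrays are reused, not copied
    dim = params["head_w"].shape[0]
    new_params["head_w"] = np.zeros((dim, num_new_classes))  # D x K, K = number of new classes
    new_params["head_b"] = np.zeros(num_new_classes)
    return new_params

rng = np.random.default_rng(0)
pretrained = {
    "backbone": rng.normal(size=(768, 768)),  # stands in for all encoder weights
    "head_w": rng.normal(size=(768, 1000)),   # head for a hypothetical 1,000-class pre-training task
    "head_b": np.zeros(1000),
}
finetune = replace_head_for_finetuning(pretrained, num_new_classes=10)
print(finetune["head_w"].shape, finetune["backbone"] is pretrained["backbone"])  # (768, 10) True
```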

Attention to the Global Context

This step also relies on the self-attention mechanism. Here, the model computes an attention score for every pair of tokens in the image, so it learns how each patch relates to every other patch. In this way, it captures long-range dependencies and information about the global context.
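Because every token is scored against every other token, the number of token pairs grows quadratically with the number of patches. This small Python sketch assumes 16×16 patches plus one classification token; `attention_pairs` is a hypothetical helper, and the count is per attention head in a single layer.

```python
def attention_pairs(image_size: int, patch_size: int) -> tuple:
    """Tokens (patches + classification token) and token pairs scored per attention head."""
    num_tokens = (image_size // patch_size) ** 2 + 1
    return num_tokens, num_tokens * num_tokens

for size in (224, 384):
    tokens, pairs = attention_pairs(size, 16)
    print(size, tokens, pairs)
# 224 197 38809
# 384 577 332929
```

Going from 224 to 384 pixels roughly triples the number of tokens but increases the number of pairs about 8.6 times, which is one reason high-resolution inputs are expensive for ViT.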

All these steps are important, and the process is incomplete without any one of them.

Difference Between ViT and CNN

The importance and features of the vision transformer become clearer when it is compared with the convolutional neural network. CNNs are among the most effective and widely used neural networks for image recognition and related tasks, but vision transformers now match or outperform them on many benchmarks, especially when pre-trained on large datasets. Here are the key differences between the two:

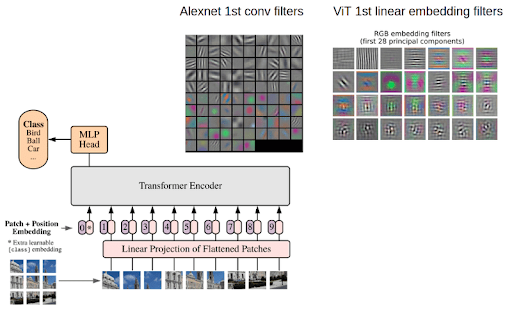

Feature Extraction

The core difference between ViT and CNN is the way they extract features. ViT uses the self-attention mechanism for feature extraction, which helps it identify long-range dependencies. The relationships between the patches are captured directly, and information about the global context is represented better.

In a CNN, feature extraction is done with convolutional filters. These filters slide over small, overlapping regions of the image and extract local features, such as textures and patterns.
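The difference in reach can be demonstrated within a single layer. In this NumPy sketch (an illustration under assumptions: a toy 8×8 grayscale image, random filter values, 2×2 patches, parameter-free attention, and hypothetical helper names), changing one corner pixel leaves the convolution output at the opposite corner untouched, while the attention output for the opposite-corner patch changes, because attention mixes information from every patch.

```python
import numpy as np

def conv3x3(image, kernel):
    """'Valid' 3x3 convolution (as cross-correlation, like deep-learning libraries) on a 2-D image."""
    h, w = image.shape
    out = np.zeros((h - 2, w - 2))
    for i in range(h - 2):
        for j in range(w - 2):
            out[i, j] = np.sum(image[i:i + 3, j:j + 3] * kernel)  # only a 3x3 neighbourhood
    return out

def to_patch_tokens(image, p=2):
    """Split a 2-D image into non-overlapping p x p patches, one flattened token per patch."""
    h, w = image.shape
    return image.reshape(h // p, p, w // p, p).transpose(0, 2, 1, 3).reshape(-1, p * p)

def global_attention(tokens):
    """Parameter-free self-attention: each output token mixes all input tokens."""
    scores = tokens @ tokens.T / np.sqrt(tokens.shape[-1])
    w = np.exp(scores - scores.max(axis=-1, keepdims=True))
    w /= w.sum(axis=-1, keepdims=True)
    return w @ tokens

rng = np.random.default_rng(0)
image = rng.normal(size=(8, 8))
kernel = rng.normal(size=(3, 3))
changed = image.copy()
changed[7, 7] += 5.0  # perturb only the bottom-right pixel

# The top-left convolution output only sees pixels [0:3, 0:3], so it does not change.
conv_same = np.isclose(conv3x3(image, kernel)[0, 0], conv3x3(changed, kernel)[0, 0])
# The top-left patch token attends to every patch, including the one holding pixel (7, 7).
attn_same = np.allclose(global_attention(to_patch_tokens(image))[0],
                        global_attention(to_patch_tokens(changed))[0])
print(conv_same, attn_same)  # True False
```

Stacking convolutional layers does widen what each output can see, but this happens gradually, whereas a single attention layer already spans the whole image.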

Architecture of the Model

ViT uses a transformer-based architecture, similar to the one used in natural language processing. As mentioned before, ViT consists of:

An encoder with multiple self-attention layers and a final classification head. Stacking multiple layers allows ViT to learn richer representations.

A CNN uses a feed-forward architecture, and its main components are:

Convolutional layers

Pooling layers

Activation functions

Strength of Networks

Both have strengths that should be kept in mind when choosing between them:

The ViT has the following features that make it useful:

ViT can handle global context effectively

It is less sensitive to image size and resolution

It is efficient for parallel processing, making it fast

CNN, on the other hand, has some features that ViT lacks, such as:

It learns local features efficiently

Its explicit filters make it more interpretable

It is well-established and computationally efficient

These are the basic differences. The following table compares the two side by side:

| Feature | Convolutional Neural Network | Vision Transformer |
| --- | --- | --- |
| Feature Extraction | Convolutional filters | Self-attention mechanism |
| Architecture | Feedforward | Transformer-based |
| Strengths | Local features, interpretability, computational efficiency | Global context, less sensitive to image size, parallel processing |
| Weaknesses | Long-range dependencies, image size and resolution, filter design | More computational resources, interpretability, small images |
| Applications | Image classification, object detection, image recognition, video recognition, medical imaging | Image classification, object detection, image segmentation |
| Current Trends | N/A | Increasing popularity, ViT and CNN combinations, interpretability and efficiency improvements |

Applications of Vision Transformer

ViT is a relatively recent architecture, yet it has already been applied in many fields. Here is an overview of some applications where it is currently used:

Image Classification

The most common and prominent use of ViT is image classification. It has achieved strong results on benchmark datasets such as ImageNet and CIFAR-100, assigning images to their categories with high accuracy.

Object Detection

Thanks to large-scale pre-training, the vision transformer can also be used for object detection. A pre-trained ViT serves as the feature extractor, and an additional detection head on top of it predicts bounding boxes and confidence scores for the objects in the image.

Image Segmentation with ViT

Vision transformers can also be used for image segmentation, where the image is divided into regions by assigning a class to every pixel. This pixel-level prediction describes the image in great detail, which makes it suitable for applications such as medical imaging and autonomous driving.
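A very simple way to turn ViT outputs into a segmentation map is to classify each patch token and then give every pixel the label of its patch. The NumPy sketch below is a naive illustration under stated assumptions (random encoder outputs for a 14×14 patch grid, a hypothetical linear per-patch classifier `w_cls`, five made-up classes); practical segmentation models use more elaborate decoders to recover detail finer than a 16×16 block.

```python
import numpy as np

def segment_from_patch_tokens(patch_tokens, w_cls, grid, patch_size):
    """Turn encoder outputs for the patches into a per-pixel label map.

    patch_tokens: (grid*grid, dim) encoder outputs, classification token excluded.
    w_cls: (dim, num_classes) hypothetical per-patch linear classifier.
    """
    logits = patch_tokens @ w_cls                            # (grid*grid, num_classes)
    patch_labels = logits.argmax(axis=-1).reshape(grid, grid)
    # nearest-neighbour upsampling: every pixel inherits its patch's label
    return np.repeat(np.repeat(patch_labels, patch_size, axis=0), patch_size, axis=1)

rng = np.random.default_rng(0)
tokens = rng.normal(size=(14 * 14, 768))
w_cls = rng.normal(size=(768, 5))  # 5 hypothetical segment classes
mask = segment_from_patch_tokens(tokens, w_cls, grid=14, patch_size=16)
print(mask.shape)  # (224, 224)
```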

Generative Modeling with ViT

Vision transformers are also used to generate realistic images based on what they learn from existing datasets. This is useful for applications such as image editing, content creation, and artistic exploration.

So, we have learned a lot about the vision transformer neural network architecture. We started with a basic introduction, where we saw the core concepts and the overall flow of how a vision transformer works. After that, we looked at each step used in ViT in detail, and then we compared it with CNN to understand why it is considered better than CNN in many respects. In the end, we looked at the applications of ViT to understand its scope. I hope you liked the content, and if you are confused at any point, you can ask in the comment section.

Deep Learning

Deep Learning xeohacker
