Hey learners! Welcome to a new tutorial on deep learning, where we take a closer look at the platform we have chosen for this course: TensorFlow. As a reminder, we have already studied why deep learning needs libraries, and several of them work well. In today's lecture, you will learn exactly why we chose TensorFlow for our tutorial. First, here is the list of topics that you will learn today:

Why do we use TensorFlow with deep learning?

What are some helpful features of this library?

How can you understand the mechanism of TensorFlow?

What are the architecture and components of TensorFlow?

How many phases does a TensorFlow project go through, and what happens in each?

How is data represented in TensorFlow?

Why TensorFlow for Deep Learning

In this era of technology, where artificial intelligence has taken charge of many industries, there is a high demand for platforms whose features make deep learning easier and more effective. We have looked at many deep learning libraries and tested them personally. As a result, we found TensorFlow to be the best fit for the requirements of this course.

There are many reasons behind this choice that we have already discussed in our previous sessions, but as a reminder, here is a short summary of the features of TensorFlow:

Flexibility

Easy to train

Support for parallel neural network training

Modular nature

Best match with the Python programming language

As we have chosen Python for the training process, we are comfortable with TensorFlow. It also works with traditional machine learning and handles complex numerical computations without requiring you to manage the low-level details. Google has open-sourced TensorFlow, so it is available to all types of users, including students and learners.

Helpful Features of TensorFlow

The features that we have discussed so far were very general, so here are the more specific features that you should know before getting started with TensorFlow.

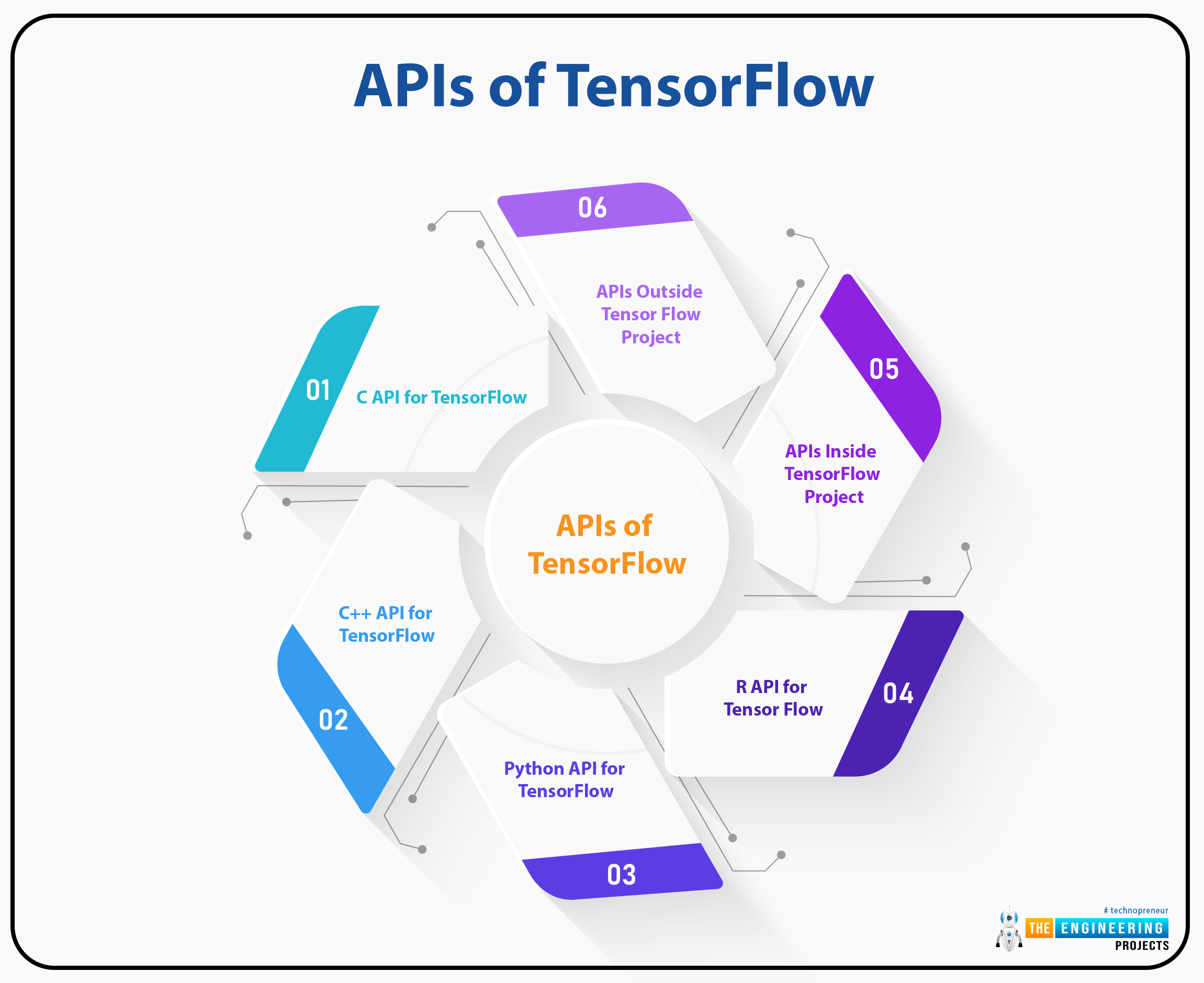

APIs of TensorFlow

Before you take an interest in any software or library, you should know which programming languages you can use to operate it. Not all programmers are experts in all languages; therefore, they choose libraries whose APIs match the languages they know. TensorFlow provides APIs in two main programming languages, C++ and Python, and integrates with two more:

C++

Python

Java (Integration)

R (Integration)

Writing deep learning code from scratch is complicated, and it is a difficult job to learn and then work with these low-level mechanisms. This is one reason we love TensorFlow.

TensorFlow provides its APIs in comparatively simple and easy-to-understand programming languages. So, with the help of C++ or Python, you can do the following jobs in TensorFlow:

Configure a neuron

Work with the neuron

Prepare the neural network
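
To make the three jobs above more concrete, here is a minimal sketch in plain Python of what configuring a neuron, working with it, and preparing a small network amount to. This is not TensorFlow's own API: the names `Neuron`, `sigmoid`, and `tiny_network`, and all the weight values, are hypothetical and chosen only for illustration. TensorFlow provides optimized versions of these building blocks so that you do not have to write them by hand.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


class Neuron:
    """A single artificial neuron: weighted sum of inputs, then activation."""

    def __init__(self, weights, bias):
        # Job 1, configure the neuron: fix its weights and bias.
        self.weights = list(weights)
        self.bias = bias

    def __call__(self, inputs):
        # Job 2, work with the neuron: compute its output for one input vector.
        total = sum(w * x for w, x in zip(self.weights, inputs)) + self.bias
        return sigmoid(total)


def tiny_network(inputs):
    # Job 3, prepare the network: two hidden neurons feed one output neuron.
    # All weights below are made-up values, not trained ones.
    hidden = [Neuron([0.5, -0.5], 0.0), Neuron([1.0, 1.0], -1.0)]
    output = Neuron([1.0, -1.0], 0.0)
    hidden_values = [neuron(inputs) for neuron in hidden]
    return output(hidden_values)


result = tiny_network([1.0, 1.0])
print(result)  # a single number between 0 and 1
```

In a real project the weights are not typed in by hand; they are learned during training, which is the part a library like TensorFlow automates.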

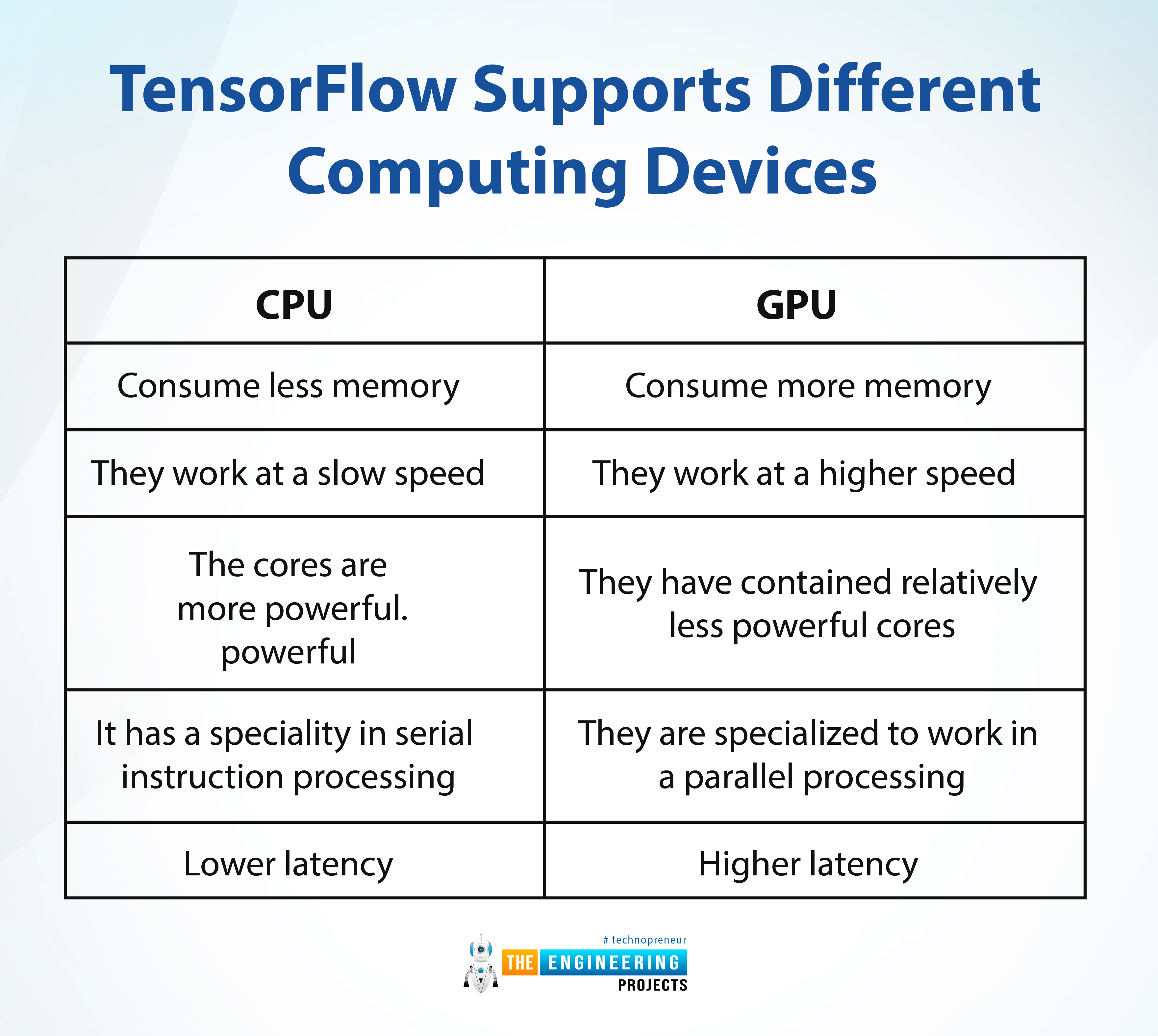

TensorFlow Supports Different Computing Devices

As we have said multiple times, deep learning is a complex field with applications in several forms, and training a neural network is not a piece of cake. The matrix multiplications and other complex mathematical computations involved take a lot of time, even with experience and careful preparation. At this point, you must know clearly about two types of processing units:

Central processing unit

Graphical processing unit

Central processing units (CPUs) are the general-purpose processors found in the computers we use in our daily lives. There are several types of CPUs, but the basic idea is enough to highlight how they differ from other processing units. Graphics processing units (GPUs), on the other hand, are better suited to the highly parallel calculations that deep learning requires. Here is a comparison between the two:

| CPU | GPU |
| --- | --- |
| Consumes less memory | Consumes more memory |
| Works at a slower speed | Works at a higher speed |
| Has more powerful cores | Has relatively less powerful cores |
| Specializes in serial instruction processing | Specializes in parallel processing |
| Lower latency | Higher latency |

The good thing about TensorFlow is that it can work with both of them, and the main purpose of comparing CPUs and GPUs was to help you pick the right match for the type of neural network you are using. Support for GPUs lets TensorFlow train large networks much faster than it could on a CPU alone. This ability is not unique to TensorFlow, though: Torch also supports GPUs, and Keras is a high-level API that runs on top of TensorFlow.

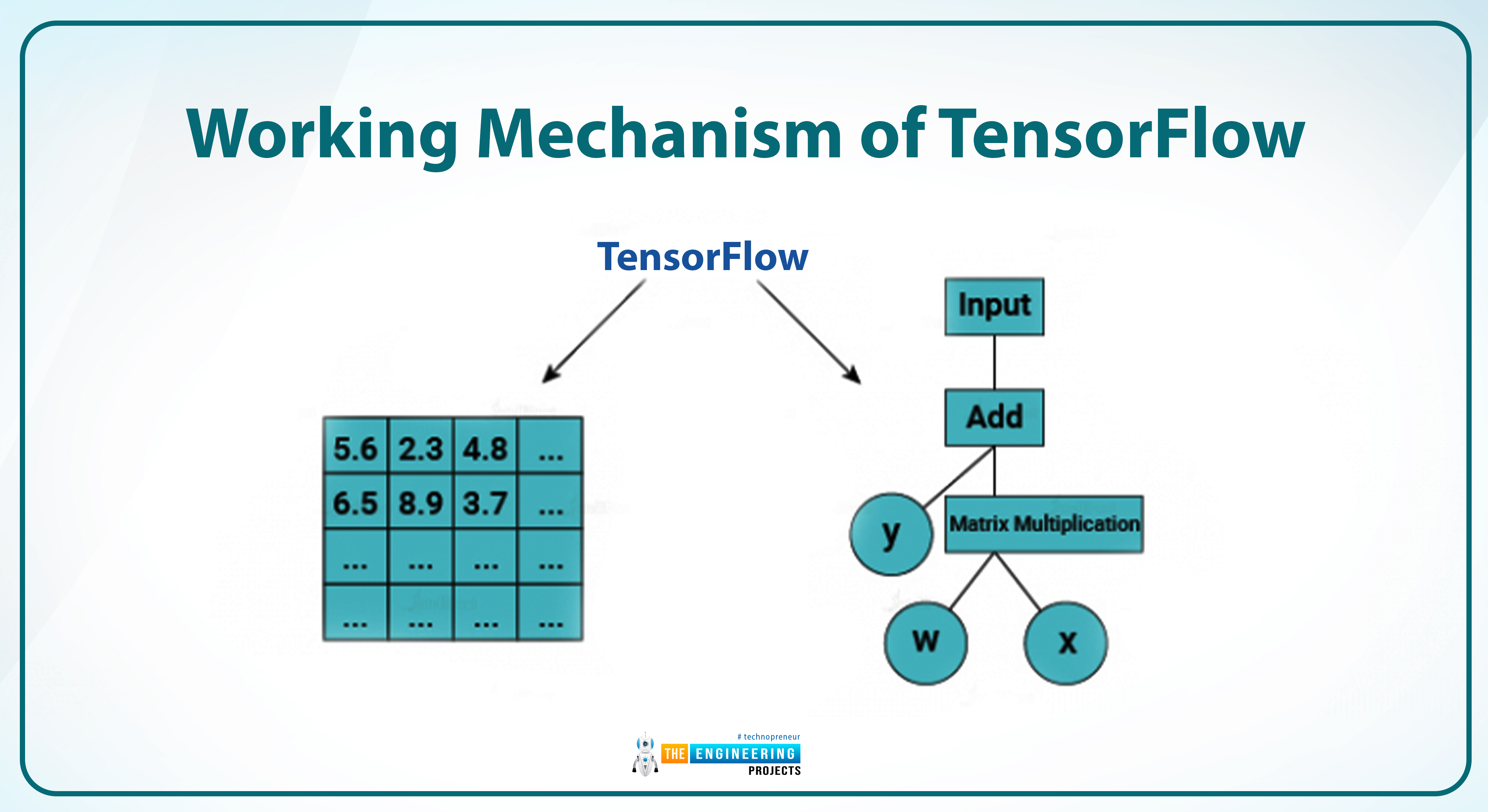

Working Mechanism of TensorFlow

It is interesting to note that Python has made working with TensorFlow easier and more efficient. This easy-to-learn programming language provides a high-level abstraction, so you can describe how nodes and tensors relate to each other without writing low-level code.

The versatility of TensorFlow makes the work easy and effective. TensorFlow modules can be used in a variety of environments, including:

Android apps

iOS

Clusters

Local machines

Hence, you can run the same modules on different types of devices, and there is no need to design or develop the same application separately for each device.

The history of deep learning is not unknown to us, and we have seen how it relates to artificial intelligence and machine learning. Usually, libraries are limited to specific fields, and for each of them, you have to install and learn a different piece of software. TensorFlow makes your work easier: you can work on traditional machine learning and deep learning neural networks in the same library if you want.

The Architecture of TensorFlow

The architecture of TensorFlow follows the way you work with the library. You can divide it into the three main parts given next:

Data Processing

Model Building

Training the model

Data processing involves structuring the data in a uniform manner so that different operations can be performed on it consistently; for example, it becomes easy to bring the values within one common limit. In the second part, the processed data is fed into the model that you build.

In the third part, the model you have built is ready to be trained, and this training is done in different phases depending on the complexity of the project.
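
The three parts described above can be sketched in plain Python as follows. This is only an illustration under stated assumptions, not TensorFlow's implementation: the function names `preprocess`, `build_model`, `predict`, and `train` are hypothetical, the model is a simple line `y = w * x + b`, and the input values and targets are made up.

```python
def preprocess(raw_values):
    """Data processing: rescale values to the common range [0, 1]."""
    low, high = min(raw_values), max(raw_values)
    return [(v - low) / (high - low) for v in raw_values]


def build_model():
    """Model building: a linear model y = w * x + b, starting from zeros."""
    return {"w": 0.0, "b": 0.0}


def predict(model, x):
    """Apply the current model to one input value."""
    return model["w"] * x + model["b"]


def train(model, xs, ys, learning_rate=0.1, epochs=2000):
    """Training: plain gradient descent on the mean squared error."""
    n = len(xs)
    for _ in range(epochs):
        errors = [predict(model, x) - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        model["w"] -= learning_rate * grad_w
        model["b"] -= learning_rate * grad_b
    return model


raw_inputs = [10.0, 20.0, 30.0, 40.0, 50.0]   # made-up raw data
xs = preprocess(raw_inputs)                   # [0.0, 0.25, 0.5, 0.75, 1.0]
ys = [2.0 * x + 1.0 for x in xs]              # made-up targets: y = 2x + 1
model = train(build_model(), xs, ys)
print(model)  # w should end up close to 2 and b close to 1
```

The separation into three functions mirrors the three architectural parts: each stage only depends on the output of the previous one, which is what lets a library swap in faster implementations for any of them.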

Phases of the TensorFlow Projects

While you are running your project on TensorFlow, you will be required to pass it through different phases. The details of each phase will be discussed in the coming lectures, but for now, you must have an overview of each phase to understand the information shared with you.

Development Phase

The development phase takes place on a PC or another type of computer, where the model is trained. Neural networks vary in the number of layers, so the effort required in the development phase depends on the complexity of the model.

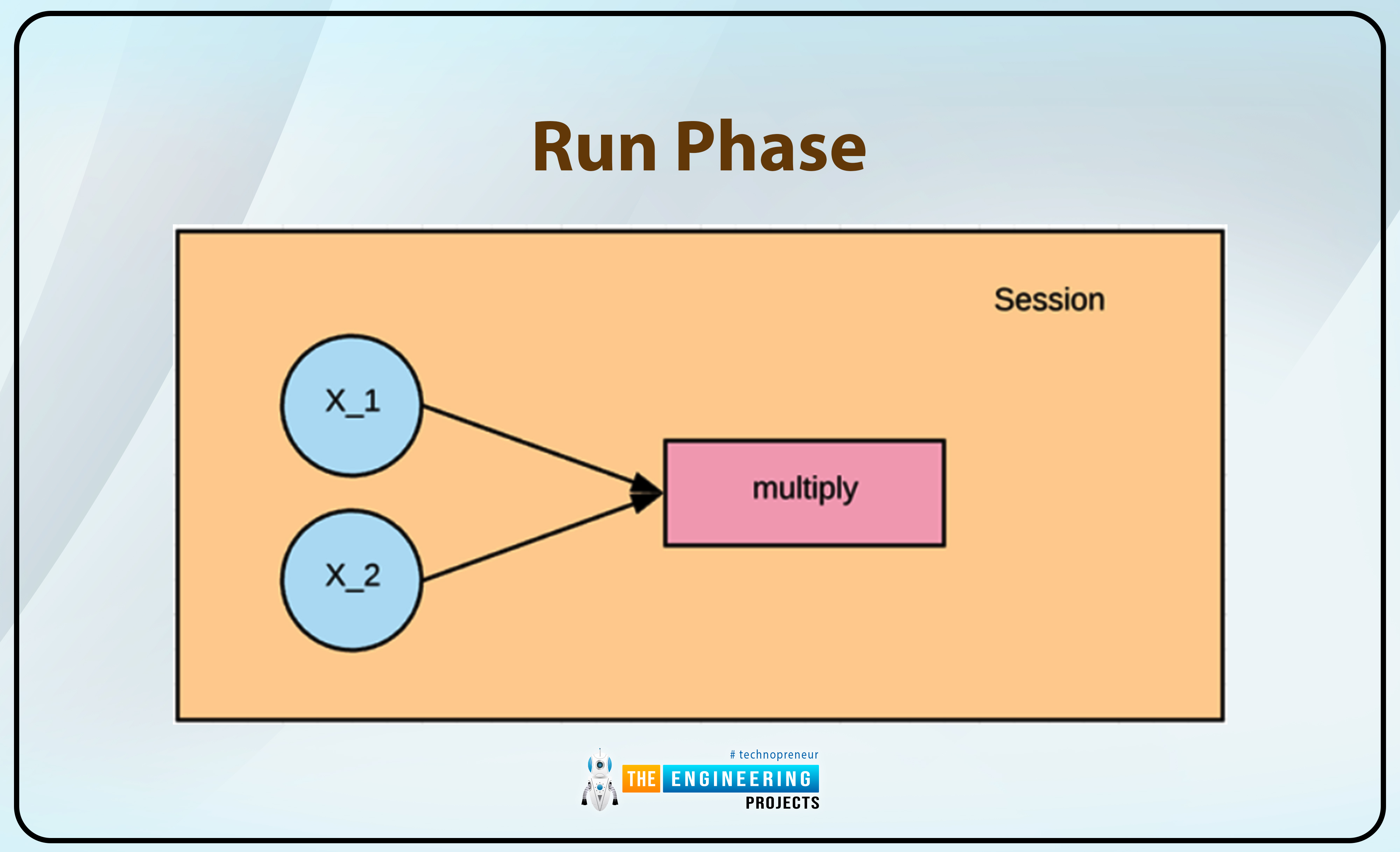

Run Phase

The run phase is also sometimes referred to as the inference phase. In this phase, you test the trained model by running it on different machines. There are multiple options for this. One of them is the desktop, which may run any operating system, whether it is Windows, macOS, or Linux. No matter which option you choose, the running procedure stays the same.

Moreover, the ability of TensorFlow to run on both the CPU and the GPU lets you test your model according to your resources. People usually prefer a GPU because it produces results in less time. If you don't have a GPU, you can do the same task on a CPU, although it is slower. People who are just getting started with deep learning often prefer the CPU because it avoids extra complexity and is less expensive.

Components in TensorFlow

Finally, we are at the part where we can learn a lot about the TensorFlow components. In this part, you are going to learn some very basic but important definitions of the components that work magically in the TensorFlow library.

Tensor

Have you ever considered the significance of this library's name? If not, then think again, because the way the library works is hidden in its name. The tensor is defined as:

"A tensor in TensorFlow is the vector or the n-dimensional data matrix that is used to transfer data from one place to another during TensorFlow procedures."

A tensor may also be formed as the result of a computation during these procedures. You must also know that all the values in a tensor have the same data type. The number of dimensions of a tensor is known as its rank, and the size along each dimension is known as its shape.
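
A small sketch may help to tell apart the rank of a tensor (how many dimensions it has) and its shape (the size along each dimension). The code below uses plain nested Python lists as stand-ins for tensors, and `shape_of` is a hypothetical helper written for this illustration; it assumes the lists are regular, meaning every row has the same length. In TensorFlow itself, each tensor carries this information in its `shape` and `dtype` attributes.

```python
def shape_of(nested):
    """Return the size along each dimension of a regular nested list."""
    shape = []
    while isinstance(nested, list):
        shape.append(len(nested))
        if not nested:          # an empty list has no further dimensions
            break
        nested = nested[0]      # descend one level, assuming regular nesting
    return tuple(shape)


scalar = 7.0                                             # rank 0
vector = [1.0, 2.0, 3.0]                                 # rank 1
matrix = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]              # rank 2
cube = [[[0.0] * 4 for _ in range(3)] for _ in range(2)]  # rank 3

for name, value in [("scalar", scalar), ("vector", vector),
                    ("matrix", matrix), ("cube", cube)]:
    shape = shape_of(value)
    print(name, "rank:", len(shape), "shape:", shape)
```

Note that the rank is simply the length of the shape: the matrix above has shape `(2, 3)` and therefore rank 2.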

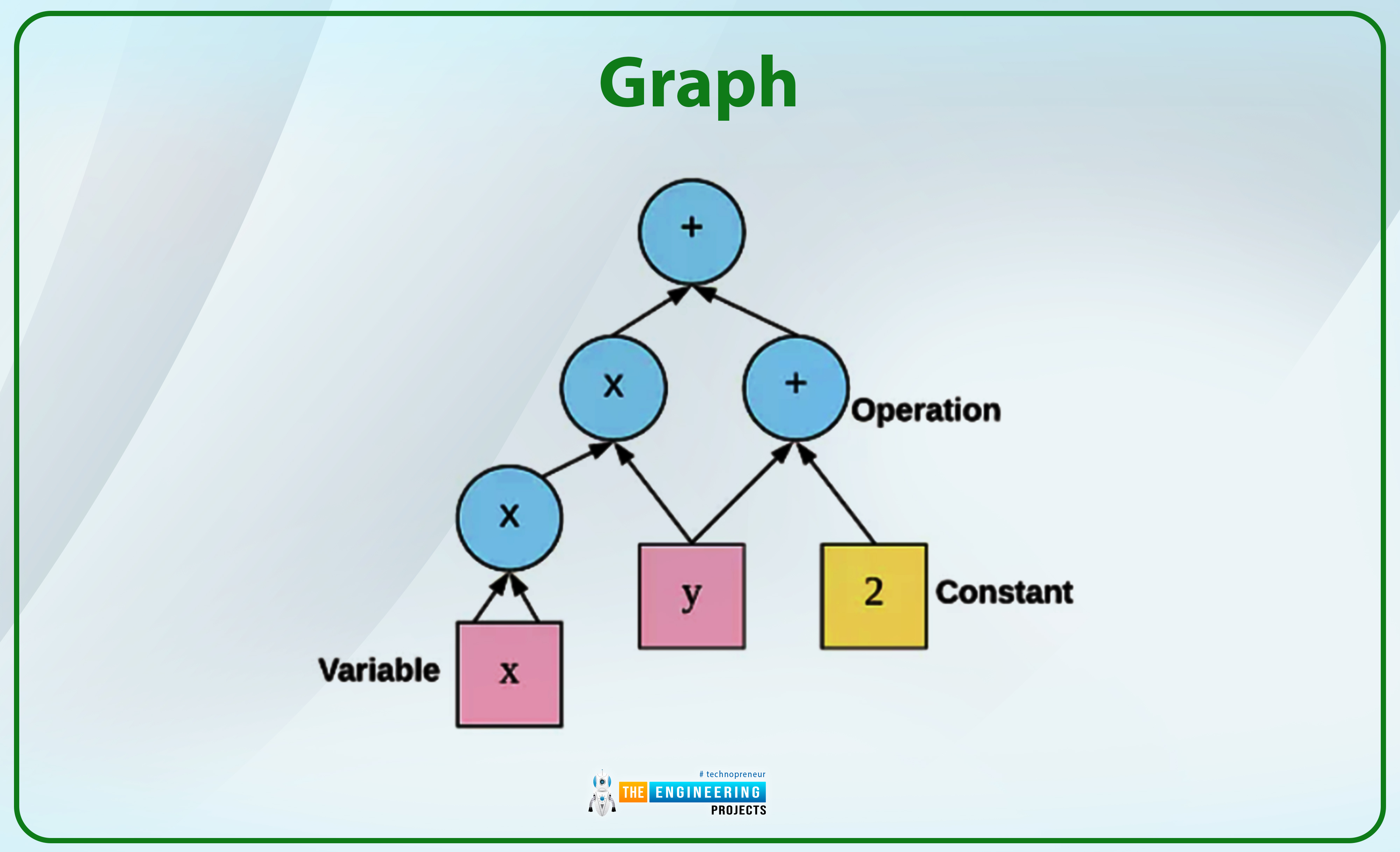

Graph

During the process of training, the set of operations taking place in the network is called a graph. These operations are connected with each other, and individually, you can call them "ops nodes." The point to notice here is that the graph does not show the values of the data fed into it; it just shows the connections between the nodes. There are certain reasons why I found graphs useful. Some of them are written next:

Graphs can be run or tested on any type of device or operating system. You have the versatility to run them on CPUs, GPUs, or mobile devices according to your resources.

Graphs can be saved, just like a simple file or folder, and reused later for the same project or for other projects. This portable nature allows different people sharing the same project to use the same graph without any issues.

Dataflow Graphs in TensorFlow

TensorFlow works differently from ordinary programs because the computation is described as a flow of data between nodes. In a traditional program, code is executed statement by statement in sequence, but in TensorFlow's graph model, execution happens inside sessions. When the graph is created, no computation is executed; the graph is just stored. The only way to execute it is to create a session. This is the model used by TensorFlow 1.x; TensorFlow 2.x executes operations eagerly by default and keeps sessions available through its compatibility API. You will see this in action in our coming lectures.

Each node in the TensorFlow graph represents a mathematical operation such as addition, subtraction, or multiplication. The edges between the nodes represent the multidimensional arrays (or tensors) that flow from one operation to the next.

In TensorFlow, this graph of operations is known as a "computational graph."

With the help of CPUs and GPUs, large-scale neural networks are easy to create and use in TensorFlow.

By default, a graph is created when you start working with TensorFlow. As you move forward, you can create your own graphs that work according to your requirements. External data is fed into the graph in the form of placeholders, variables, and constants. Once the graph is made and you want to run your project, TensorFlow can execute it efficiently on the available CPUs and GPUs.
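
The idea that building a graph executes nothing, and that values are only computed inside a session, can be illustrated with a toy dataflow graph in plain Python. This is a sketch of the concept only and not TensorFlow's API: the `Node` and `Session` classes and the `constant`, `placeholder`, `add`, and `mul` helpers are hypothetical names written for this example.

```python
class Node:
    """One operation in a toy dataflow graph."""

    def __init__(self, op, inputs=(), value=None):
        self.op = op            # "const", "placeholder", "add", or "mul"
        self.inputs = inputs    # upstream nodes whose outputs feed this one
        self.value = value      # only used by "const" nodes


def constant(value):
    return Node("const", value=value)


def placeholder():
    return Node("placeholder")


def add(a, b):
    return Node("add", (a, b))


def mul(a, b):
    return Node("mul", (a, b))


class Session:
    """Evaluates a node by walking the graph; nothing runs before this."""

    def run(self, node, feed=None):
        feed = feed or {}
        if node.op == "const":
            return node.value
        if node.op == "placeholder":
            return feed[node]   # external data supplied at run time
        left, right = (self.run(n, feed) for n in node.inputs)
        if node.op == "add":
            return left + right
        if node.op == "mul":
            return left * right
        raise ValueError(f"unknown op: {node.op}")


# Building the graph only records connections; no arithmetic happens yet.
x = placeholder()
y = add(mul(x, constant(3)), constant(2))   # describes y = x * 3 + 2

# Execution happens only inside a session, with data fed to the placeholder.
session = Session()
print(session.run(y, feed={x: 4}))  # prints 14
```

Because the graph stores only the connections, the same `y` can be run again with a different value fed to `x` without rebuilding anything.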

Hence, our discussion of TensorFlow ends here. We have covered a lot about TensorFlow today, and we hope it is enough for you to understand its importance and to know why we have chosen it among the several options. In the beginning, we saw why we use TensorFlow and what some of its helpful features are. In addition to this, we looked at the APIs and programming languages of this library. Moreover, the working mechanism and the architecture of TensorFlow were discussed, along with the phases and components. We hope you found this article useful. Stay with us for more tutorials.

Deep Learning ayeshayounas
