Hello pupils! Welcome to the next lecture on deep learning. As we move forward, we are learning about many of the latest tools and techniques, and the course is becoming more interesting. In the previous lecture, you saw some important frameworks in deep learning. This time, I am here to introduce you to some fantastic deep learning algorithms. They are important to understand before going into the practical implementation of the deep learning frameworks, and they also help you understand the applications of deep learning and related fields. So, get ready to learn the algorithms that make deep learning so effective and cool. Before going into the details, let me list the questions we are trying to answer.

How are deep learning algorithms defined?

How do deep learning algorithms work?

What are some types of DL algorithms?

How are these algorithms different from each other?

Deep learning plays an important role in object recognition, so people use it in image, video, and voice recognition, where objects are not only detected but can also be changed, removed, edited, or altered using different techniques. The purpose of discussing these algorithms with you is to give you enough knowledge and practice to choose the right algorithm for your task and to understand how each algorithm works and how efficient it is. Moreover, we will discuss applications to give you ideas for new projects, whether by merging two or more algorithms or by creating your own.

What is a Deep Learning Algorithm?

Throughout this course, you have been learning that, with deep learning, computers are trained so that they can make human-like decisions and act on their own intelligence. Now it is time to learn how they do this and what lies behind the success of these intelligent computers.

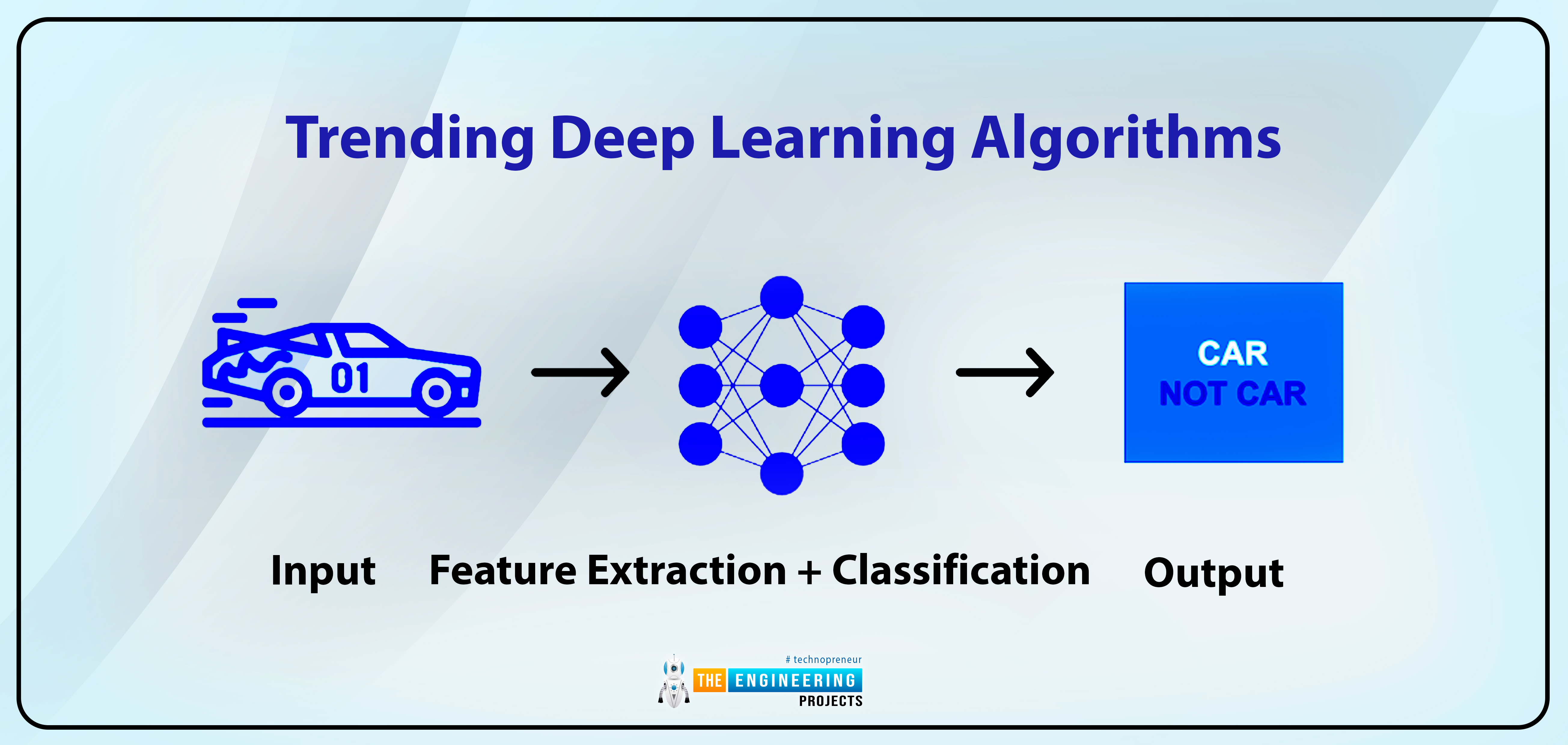

First of all, keep in mind that deep learning is done in different layers, and these layers are run with the help of an algorithm. We define a deep learning algorithm as:

“Deep learning algorithms are sets of instructions designed to run across the several layers of a neural network (the number of layers depends on the complexity of the network), and they are responsible for passing all the data through the trained, decision-making network.”

One must know that, in machine learning, training is usually tough when working with complex datasets that have hundreds of columns or features. This becomes difficult with the classic algorithms, so developers are constantly designing more powerful algorithms through experimentation and research.

How Do Deep Learning Algorithms Work?

When people use different types of neural networks in deep learning, they have to learn several algorithms to understand how each layer of the network works. Basically, these algorithms depend on ANNs (artificial neural networks), which are inspired by the way the human brain processes information.

While the neural network is being trained, these algorithms take unknown data as input and use it for the following purposes:

To group the objects

To extract the required features

To find useful patterns in the data

The basic purpose of these algorithms is to build different types of models. There are several algorithms for neural networks, and no single algorithm is considered perfect for every type of task. Each has its own pros and cons, and to master deep learning algorithms, you have to study and test several of them in different ways.

Types of Deep Learning Algorithms

Do you remember that in the previous lectures we discussed the types of deep learning networks? While discussing the deep learning algorithms, you will draw on those neural network concepts. As deep learning advances, several new algorithms are introduced every year. So, have a look at the list of algorithms.

Convolutional Neural Networks (CNNs)

Long Short-Term Memory Networks (LSTMs)

Deep Belief Networks (DBNs)

Generative Adversarial Networks (GANs)

Autoencoders

Radial Basis Function Networks (RBFNs)

Multilayer Perceptrons (MLPs)

Restricted Boltzmann Machines (RBMs)

Recurrent Neural Networks (RNNs)

Self-Organizing Maps (SOMs)

Do not worry, because we are not going to discuss all of them at once. We will discuss only the important ones to give you an overview of the networks.

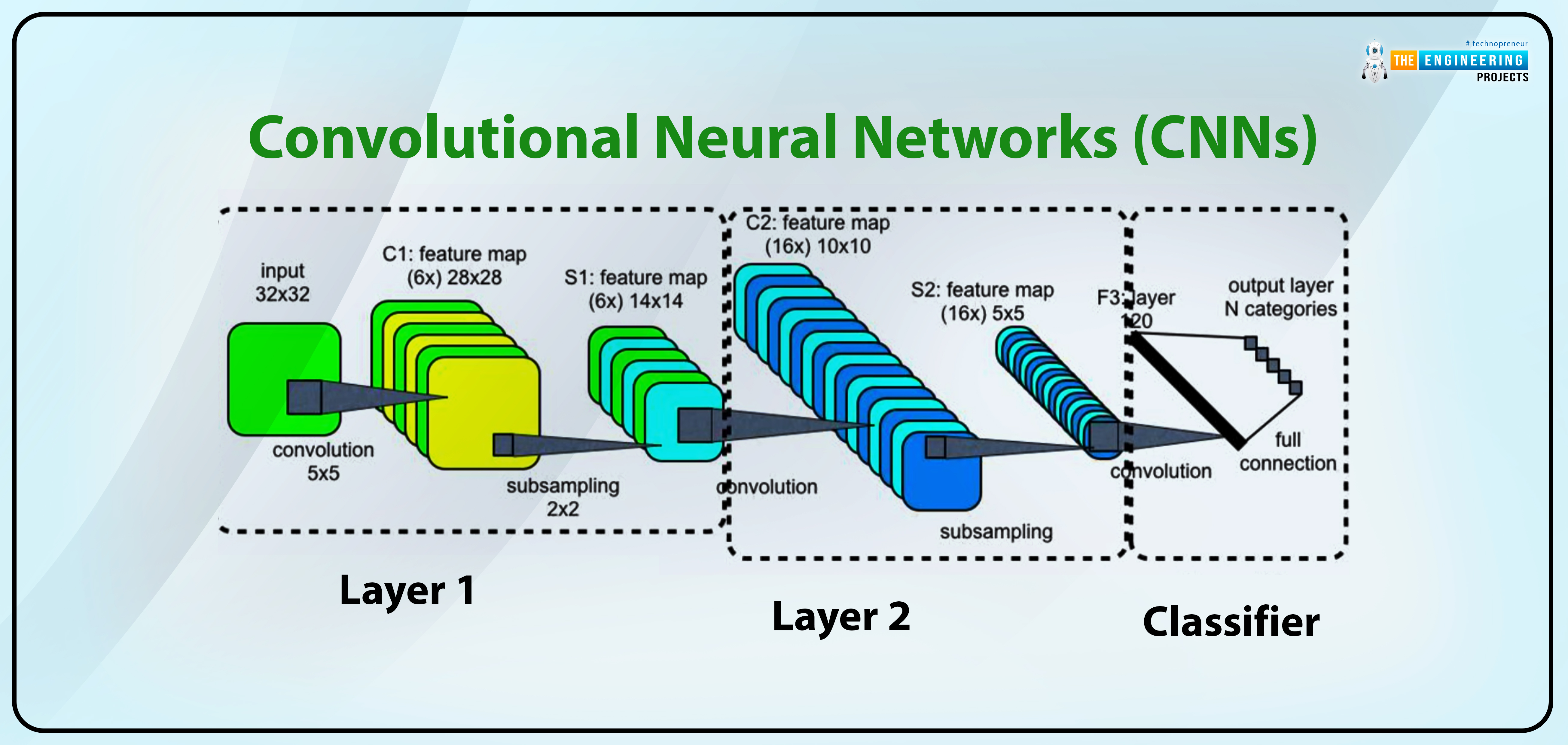

Convolutional Neural Networks (CNNs)

Convolutional neural networks are also known as "ConvNets," and their main applications are in image processing and related fields. Looking back at the history, Yann LeCun developed an early network of this kind, called LeNet, in the late 1980s, and the well-known LeNet-5 version was published in 1998. At that time, it was used to recognize ZIP codes and other handwritten characters.

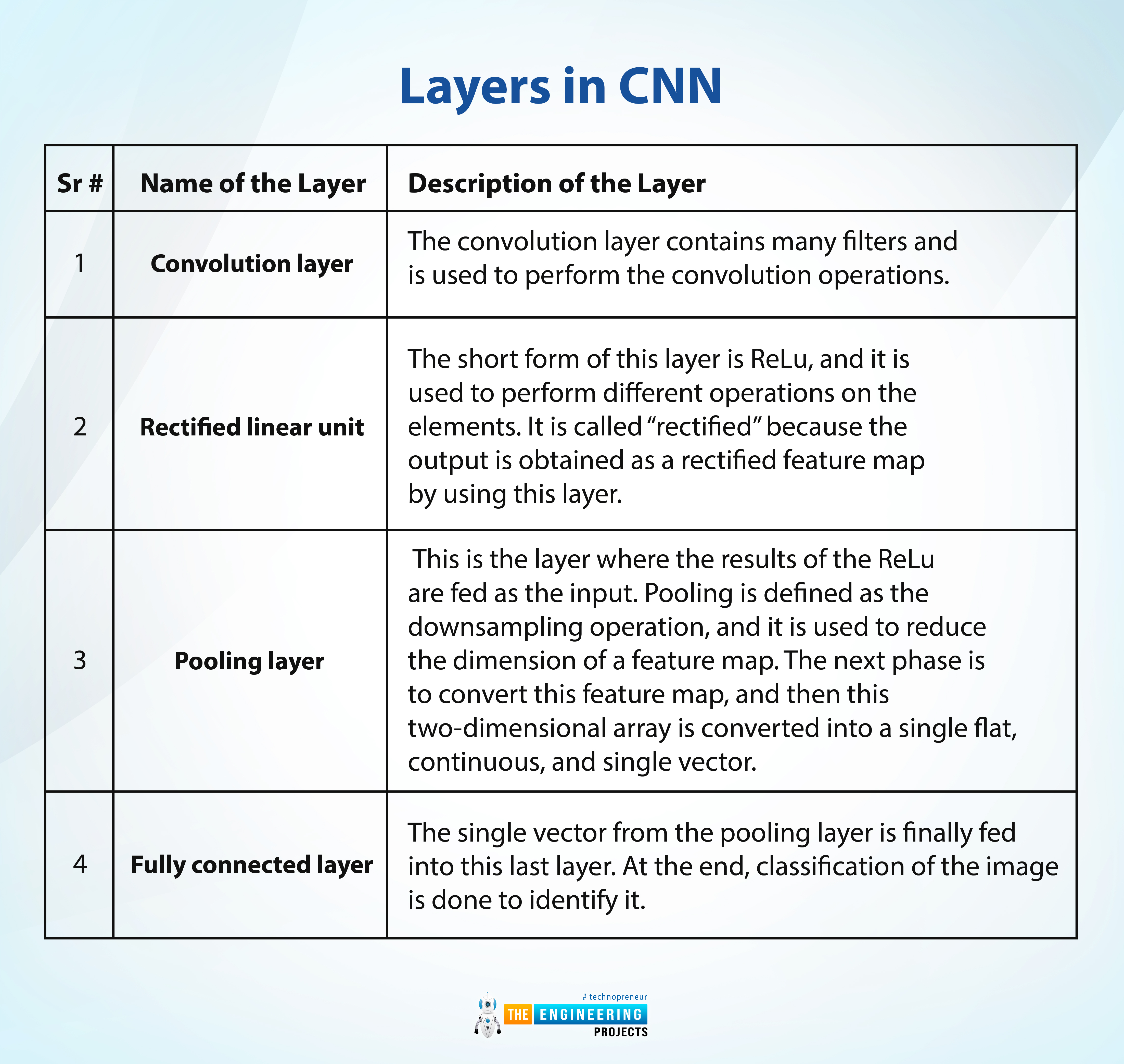

Layers in CNN

We know that neural networks have many layers, and the same is true of CNNs. The layers in this type of network are described below:

| Sr # | Name of the Layer | Description of the Layer |
| --- | --- | --- |
| 1 | Convolution layer | The convolution layer contains many filters and is used to perform the convolution operations. |
| 2 | Rectified linear unit | Usually shortened to ReLU, this layer applies an element-wise operation, max(0, x), that replaces negative values with zero. It is called “rectified” because its output is a rectified feature map. |
| 3 | Pooling layer | This layer takes the output of the ReLU as its input. Pooling is a downsampling operation that reduces the dimensions of a feature map. The pooled two-dimensional feature map is then flattened into a single, continuous vector. |
| 4 | Fully connected layer | The flattened vector from the pooling layer is finally fed into this last layer, which classifies the image to identify it. |

As a reminder, neural networks have many layers, and the output of one layer becomes the input of the next. In this way, the results are refined at every layer.
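
To make the flow through the four layers concrete, here is a minimal pure-Python sketch. It is only an illustration under stated assumptions: the 5x5 image, the 2x2 filter, and the fully connected weights are made-up values, and a real CNN would learn its filters and weights during training and would normally be built with a framework.

```python
# Minimal sketch of the CNN stages: convolution -> ReLU -> pooling -> flatten -> fully connected.
# All numbers below are hypothetical and chosen only to keep the arithmetic easy to follow.

def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [sum(image[i + a][j + b] * kernel[a][b]
             for a in range(kh) for b in range(kw))
         for j in range(out_w)]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Replace every negative value with zero (the rectified feature map)."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Downsample by keeping the largest value in each size x size block."""
    return [
        [max(feature_map[i + a][j + b]
             for a in range(size) for b in range(size))
         for j in range(0, len(feature_map[0]) - size + 1, size)]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

def flatten(feature_map):
    """Turn the 2D feature map into a single flat vector."""
    return [v for row in feature_map for v in row]

def fully_connected(vector, weights, biases):
    """One score per class: weighted sum of the vector plus a bias."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, biases)]

image = [
    [1, 0, 2, 1, 0],
    [0, 1, 3, 0, 1],
    [2, 1, 0, 1, 2],
    [1, 0, 1, 3, 0],
    [0, 2, 1, 0, 1],
]
kernel = [[1, 0], [0, -1]]               # hypothetical 2x2 filter
weights = [[1, 0, 0, 1], [0, 1, 1, 0]]   # hypothetical weights for two classes
biases = [0, 0]

pooled = max_pool(relu(convolve2d(image, kernel)))
flat = flatten(pooled)
scores = fully_connected(flat, weights, biases)
predicted_class = scores.index(max(scores))
print(pooled, flat, scores, predicted_class)
```

Each function takes the output of the previous one as its input, which mirrors the layer-by-layer flow described above.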

Long Short-Term Memory Networks (LSTMs)

An LSTM is a type of RNN (recurrent neural network) designed to learn and remember long-term dependencies. Recalling past information over long periods is its default behaviour, and because of this ability, LSTMs are used in time series prediction. An LSTM cell is not a single layer but a combination of four layers that interact with each other in a unique way. Some typical uses of LSTMs are given below:

Speech recognition

Pharmaceutical development

Music composition

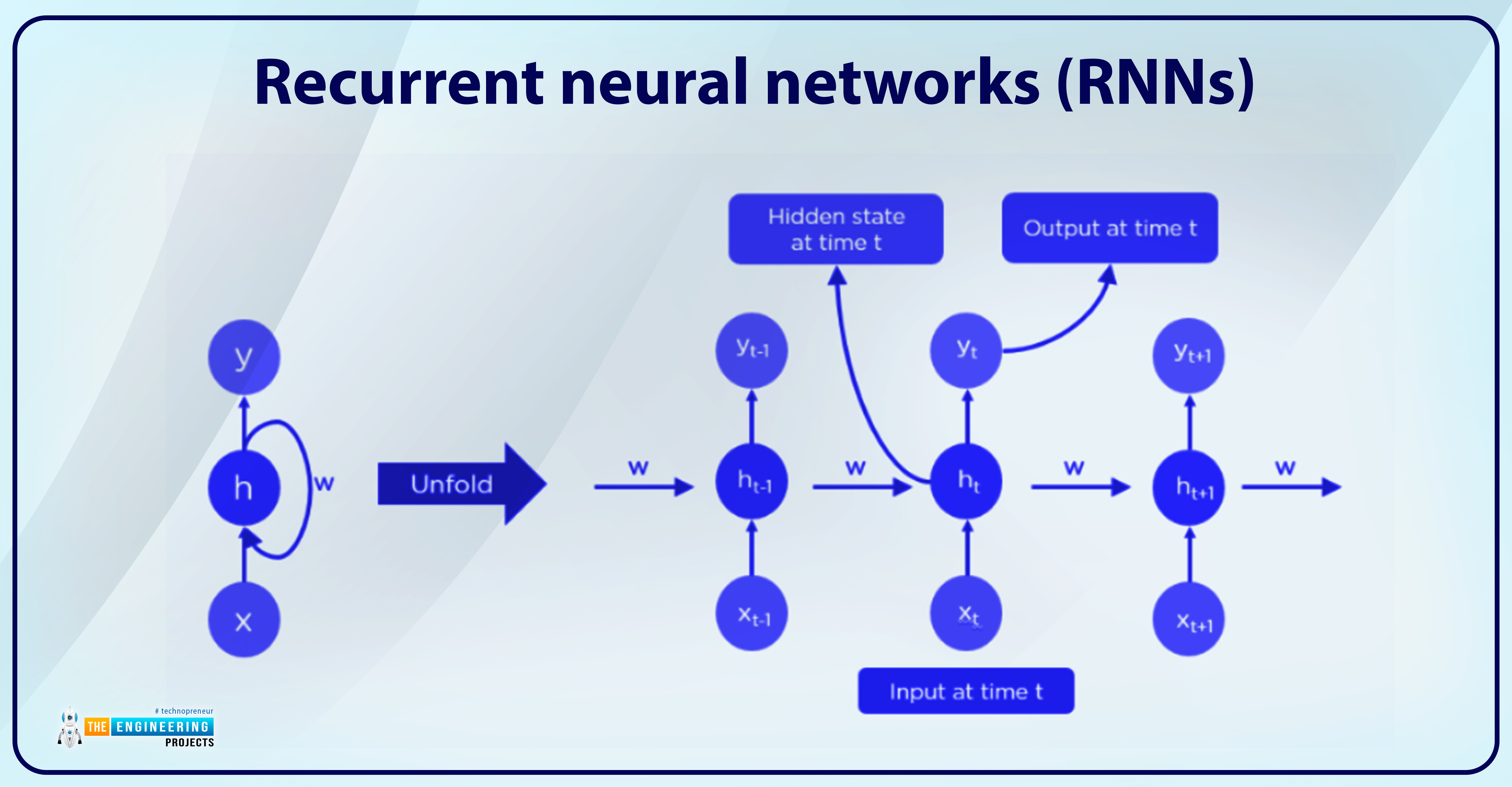
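
The following is a minimal sketch of one LSTM step with scalar values. It assumes the common textbook formulation, in which the four interacting layers are the forget gate, the input gate, the candidate layer, and the output gate; the lecture does not name them, so treat these names and the parameter dictionary `p` as assumptions made for illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell. `p` holds hypothetical weights and biases."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate: how much old memory to keep
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate: how much new content to write
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate content
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate: how much memory to expose
    c = f * c_prev + i * g      # updated cell state (the long-term memory)
    h = o * math.tanh(c)        # updated hidden state (the output of this step)
    return h, c

# Hypothetical parameters: the forget gate is almost fully open (bf = 10)
# and the input gate is almost closed (bi = -10), so the memory is preserved.
params = {"wf": 0.0, "uf": 0.0, "bf": 10.0,
          "wi": 0.0, "ui": 0.0, "bi": -10.0,
          "wg": 0.0, "ug": 0.0, "bg": 0.0,
          "wo": 0.0, "uo": 0.0, "bo": 0.0}

h, c = 0.0, 1.0                      # start with a value stored in the cell state
for x in [0.3, -1.2, 0.8] * 10:      # 30 arbitrary inputs
    h, c = lstm_step(x, h, c, params)
print(c)                             # the stored value is still close to 1.0
```

With these settings, the cell state barely changes over 30 steps, which is the "long-term memory" behaviour that a plain recurrent layer struggles to provide.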

Recurrent Neural Networks (RNNs)

If you are familiar with the fundamentals of programming, you will know that when we want to repeat a process, loops, or recurrent processes, are the solution. Similarly, a recurrent neural network has connections that form directed cycles. The unique thing about it is that the output of the previous step (for example, the output of an LSTM cell) is fed back as an input to the current step. In other words, the steps are connected in a sequence, and the network can use its internal memory of previous inputs while processing the current one.

The main reason why this connection is so useful is that the network can combine the memory of an LSTM with the cyclic processing of an RNN. Some uses of RNNs are given next:

Handwriting recognition

Time series analysis

Machine translation

Natural language processing

Image captioning

Working of RNN

The output of the RNN at each time step is obtained as follows:

The output at time t-1 is fed into the input at time t.

Similarly, the output at time t is fed into the input at time t+1.

And this series goes on for every step of the sequence.

Moreover, an RNN can process inputs of any length, and the size of the model does not increase with the size of the input.
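
A minimal sketch of this recurrence, assuming a single scalar hidden state and a tanh activation (both are simplifications chosen for illustration, and the weights `w_x`, `w_h`, and `b` are hypothetical values):

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0, h0=0.0):
    """Run a scalar RNN over a sequence and return the state after each step."""
    h = h0
    states = []
    for x in inputs:
        # The previous state h (from time t-1) is combined with the input at time t.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

short_sequence = rnn_forward([1.0, 2.0, 3.0])
long_sequence = rnn_forward([0.1] * 50)
print(len(short_sequence), len(long_sequence))
```

The same three parameters handle the sequence of length 3 and the sequence of length 50, which is why the model does not grow with the input.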

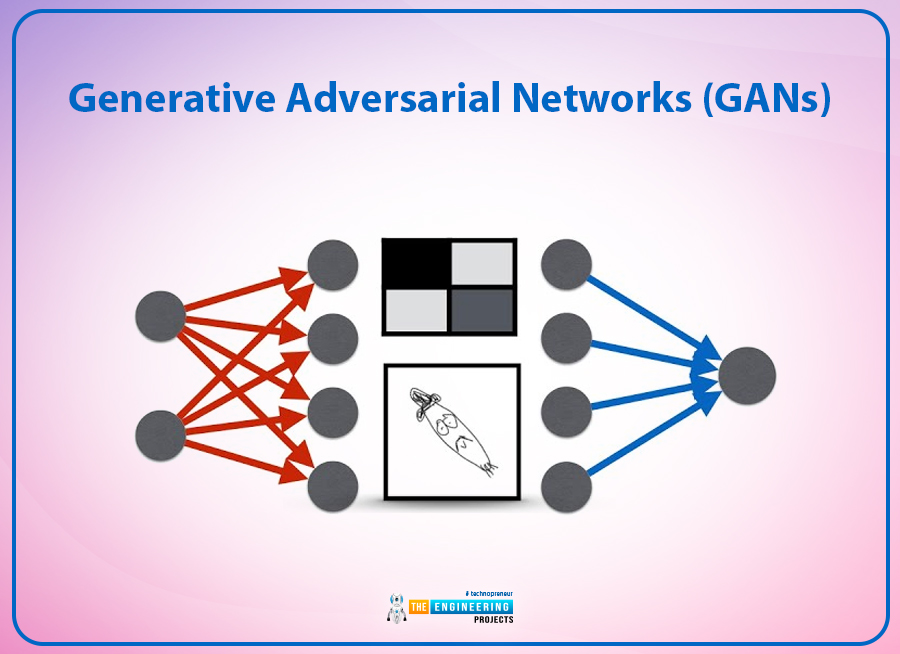

Generative Adversarial Networks (GANs)

Next on the list is the GAN, or generative adversarial network. These are known as “adversarial networks” because they use two networks that compete with each other to generate new, synthetic data that resembles the real data. This is one of the major reasons why generative adversarial networks are applied in video, image, and voice generation.

GANs were first described in a paper published in 2014 by Ian Goodfellow and other researchers at the University of Montreal, including Yoshua Bengio. Yann LeCun, Facebook's AI research director, referred to GANs as "the most interesting idea in ML in the last 10 years." This made GANs a popular and interesting type of neural network.

Another reason why I like this network is its fantastic ability to mimic. You can create music, voice, video, or related content that is difficult to recognize as machine-made. GANs can also generate realistic images and cartoons with high-quality results and render 3D objects. The impressive results are making this network more popular every day, but its potential for harm is just as great. As with all technologies, people can use it for negative purposes, so checks and balances need to be applied to such techniques.

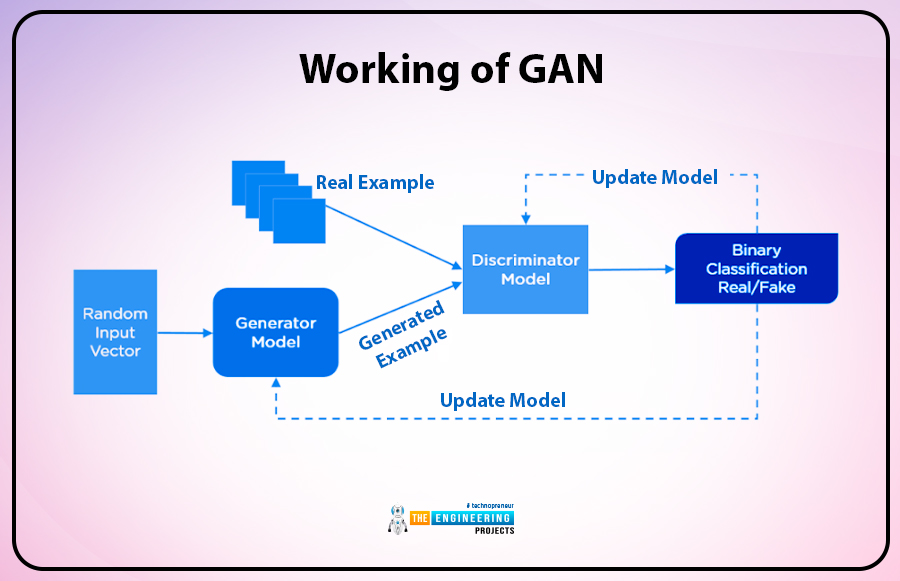

Working of GAN

At first, the discriminator learns to distinguish between the generator's fake data and the real sample data. During the initial training, the generator produces fake data, and the discriminator learns to recognise whether a sample is real or fake. After that, the GAN sends the results back to the generator and the discriminator so that both can update and continue training.

If this seems like a simple and easy task, think again: the recognition part is a tough job, and you have to feed in good data in the right way to get accurate results.
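
The alternating updates can be sketched on a toy one-dimensional problem. Everything here is an assumption made for illustration: the generator is a line G(z) = a * z + b, the discriminator is a logistic function D(x) = sigmoid(w * x + c), the "real" data comes from a normal distribution with mean 4, and the gradients are written out by hand. Real GANs use deep networks on both sides and a framework that computes the gradients.

```python
import math
import random

def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def discriminator_step(w, c, x_real, x_fake, lr):
    """One gradient step on -[log D(x_real) + log(1 - D(x_fake))]."""
    d_real = sigmoid(w * x_real + c)
    d_fake = sigmoid(w * x_fake + c)
    grad_w = -(1.0 - d_real) * x_real + d_fake * x_fake
    grad_c = -(1.0 - d_real) + d_fake
    return w - lr * grad_w, c - lr * grad_c

def generator_step(a, b, w, c, z, lr):
    """One gradient step on -log D(G(z)), so the generator tries to fool D."""
    x_fake = a * z + b
    d_fake = sigmoid(w * x_fake + c)
    grad_x = -(1.0 - d_fake) * w          # gradient of the loss w.r.t. the fake sample
    return a - lr * grad_x * z, b - lr * grad_x

def train(steps=200, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0                        # generator parameters
    w, c = 0.0, 0.0                        # discriminator parameters
    for _ in range(steps):
        x_real = rng.gauss(4.0, 0.5)       # sample of "real" data (assumed toy distribution)
        z = rng.gauss(0.0, 1.0)            # random noise fed to the generator
        w, c = discriminator_step(w, c, x_real, a * z + b, lr)  # D learns real vs. fake
        a, b = generator_step(a, b, w, c, z, lr)                # G learns from D's feedback
    return a, b, w, c

a, b, w, c = train()
print(f"generator: a={a:.3f}, b={b:.3f}  discriminator: w={w:.3f}, c={c:.3f}")
```

The loop shows the order of the two updates only. A toy setup like this is not guaranteed to converge, which is one way to see why training GANs is considered a tough job.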

Radial Basis Function Networks (RBFNs)

For function approximation problems, we use a technique called the radial basis function network. It is a little different from the previous ones. RBFNs are feedforward neural networks, and they are often valued for their learning speed, although they require experience and hard work to set up well. One reason for this is their simple structure: they have only one hidden layer, and its neurons use radial basis functions as activation functions. Keep in mind that these activation functions are well suited to approximating results.

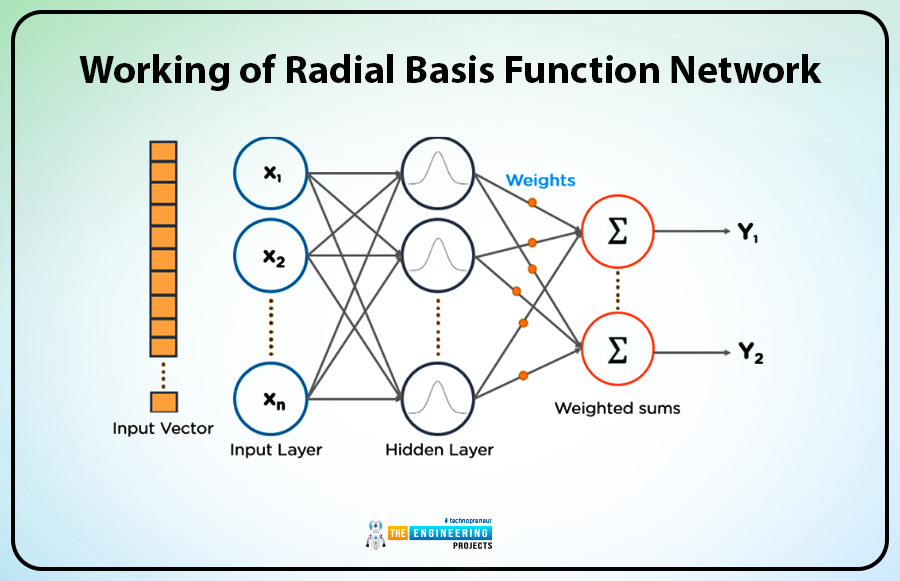

Working of Radial Basis Function Network

It takes the data from a training set and measures the similarity of the input to those examples. In this way, it classifies the data.

The input vector is fed into the input layer, which passes it on to a layer of RBF neurons.

The output is obtained by finding the weighted sum of the RBF neurons' outputs. Each category or class of data has one output node.

The difference from the other networks is that the hidden neurons contain a Gaussian transfer function, so the output of a neuron decreases as the distance between the input and the centre of that neuron grows.

In the end, we get the output, which is a linear combination of the radial basis functions of the input and the neuron parameters.
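
These steps can be sketched as a forward pass in pure Python. The centres, the width parameter `beta`, and the output weights are hypothetical values picked by hand; in practice they would be fitted to the training set.

```python
import math

def gaussian_rbf(x, center, beta):
    """Gaussian activation: 1.0 at the centre, smaller as the input moves away from it."""
    dist_sq = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-beta * dist_sq)

def rbfn_forward(x, centers, beta, weights):
    """Hidden layer of RBF neurons followed by one weighted-sum output node per class."""
    activations = [gaussian_rbf(x, c, beta) for c in centers]
    return [sum(w * a for w, a in zip(row, activations)) for row in weights]

centers = [(0.0, 0.0), (1.0, 1.0)]   # one hypothetical prototype per class
weights = [[1.0, 0.0],               # output node for class 0
           [0.0, 1.0]]               # output node for class 1
beta = 1.0

scores = rbfn_forward((0.9, 1.1), centers, beta, weights)
predicted_class = scores.index(max(scores))
print(scores, predicted_class)
```

The input (0.9, 1.1) lies much closer to the second centre, so the second output node receives the larger score and the input is assigned to class 1.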

So, these networks are enough for today. There are other types of neural networks as well, and, as we said earlier, more and more algorithms with their own specifications are being introduced as deep learning advances. At this level, we just wanted to give you an idea about the neural networks. At the start of this article, we saw what deep learning algorithms are and how they work. Then we looked at several types of neural networks, including CNNs, LSTMs, RNNs, GANs, and RBFNs.
