Hi learners! I hope you are having a good day. In the previous lecture, we looked at Kohonen’s neural network. Modern neural networks play a crucial role in many industries, and today we are talking about another one: EfficientNet. It is not a single neural network but a family of networks that share the same principles and architecture while differing in size and accuracy.

EfficientNet brought notable innovations to deep learning and computer vision. By making accurate models cheaper to run, it makes these fields more accessible and widens their range of practical applications. We will start with an introduction and then look at the structure of this neural network. So let’s start learning.

Introduction to EfficientNet Neural Network

EfficientNet is a family of convolutional neural networks (CNNs) designed to reach state-of-the-art accuracy while using fewer parameters and computations than earlier CNNs of similar accuracy.

EfficientNet was introduced in 2019 in the research paper “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks” by Mingxing Tan and Quoc V. Le, both researchers at Google AI. It has since become one of the most popular CNN families, largely because it applies well across multiple domains.

The motivation behind EfficientNet's development was the success of its parent architecture, the CNN, which is accurate but computationally expensive. Deploying CNNs in resource-constrained environments such as mobile devices was difficult, and this led to the idea of EfficientNet.

Working of EfficientNet

EfficientNet follows the same basic working principle as any CNN, but it reaches a better balance of efficiency and accuracy because of the way the network is scaled. Its convolutional layers extract features from the input efficiently, which helps a lot when processing images and other complex data. As a result, this neural network is one of the most suitable choices for fields like computer vision and image processing.

Members of the EfficientNet Family

As we have mentioned earlier, EfficientNet is not a single neural network but a family. Every member has the same basic architecture but differs in size and computational cost. A few terms are important to understand before looking at the differences between these members:

FLOPs in EfficientNet

For a neural network, FLOPs here denote the number of floating-point operations needed to process one input, not the number of operations per second (that rate is usually written FLOPS). For EfficientNet members, this count is reported in billions.

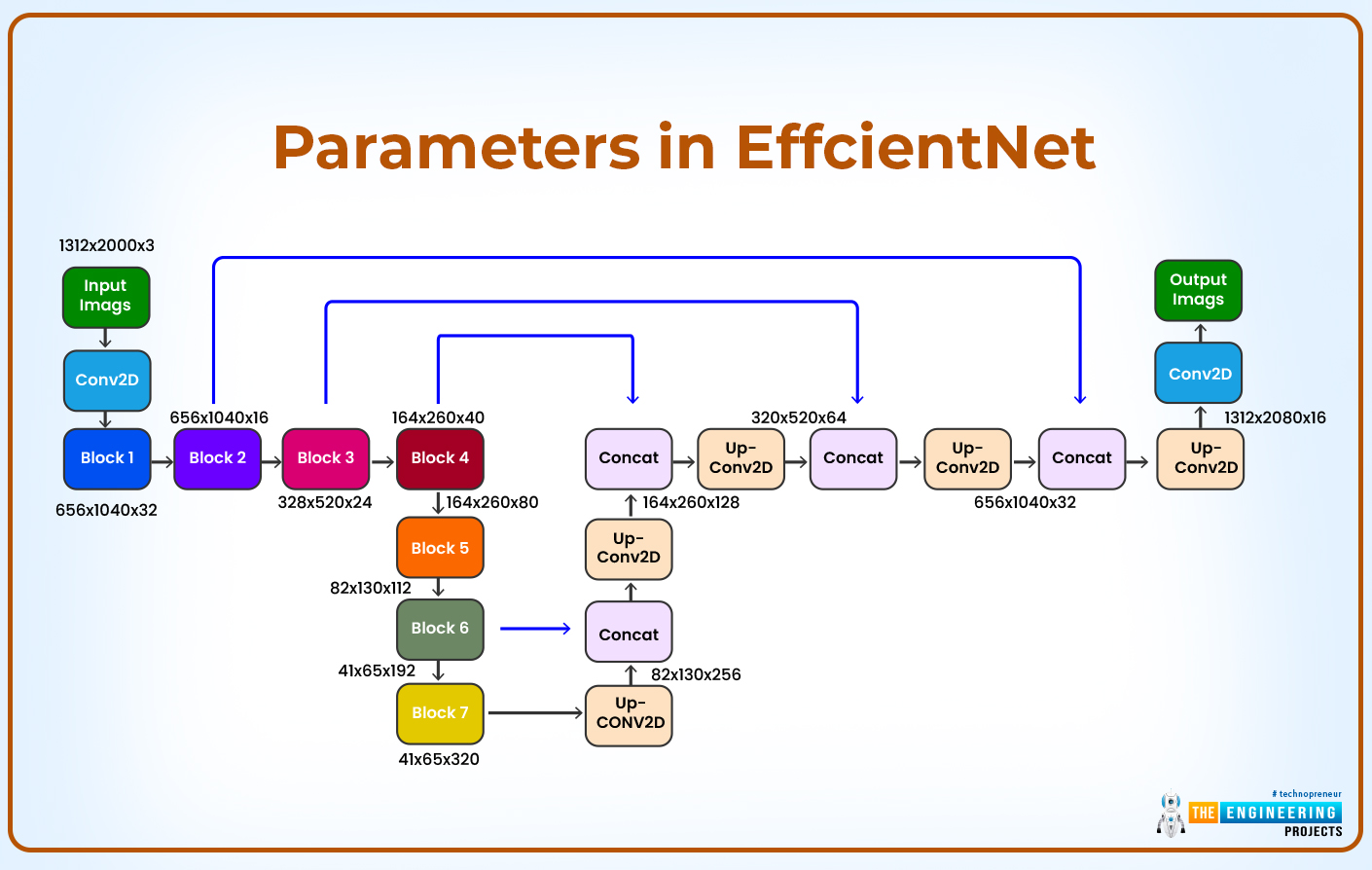
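
As a rough illustration of where such a count comes from, the sketch below estimates the multiply-add operations of a single standard convolutional layer. The function name `conv2d_mult_adds` and the layer sizes are assumptions made for this example; they are not taken from the EfficientNet paper. Note that conventions differ: some sources count one multiply-add as one operation, others as two.

```python
def conv2d_mult_adds(out_h, out_w, in_ch, out_ch, kernel):
    """Multiply-adds for one standard convolution layer (bias ignored)."""
    # Every output value needs kernel * kernel * in_ch multiply-adds.
    per_output = kernel * kernel * in_ch
    # There are out_h * out_w positions for each of the out_ch output channels.
    return out_h * out_w * out_ch * per_output


# Hypothetical layer: 3x3 kernel, 32 -> 64 channels, 112x112 output map.
ops = conv2d_mult_adds(112, 112, 32, 64, 3)
print(f"{ops / 1e9:.3f} billion multiply-adds")
```

Summing this kind of estimate over every layer gives the total for the whole network.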

Parameters in EfficientNet

The parameters are the weights and biases that the neural network learns during the training process. They are usually reported in millions, so a value of 5.3 means the particular member has 5.3 million trainable parameters.

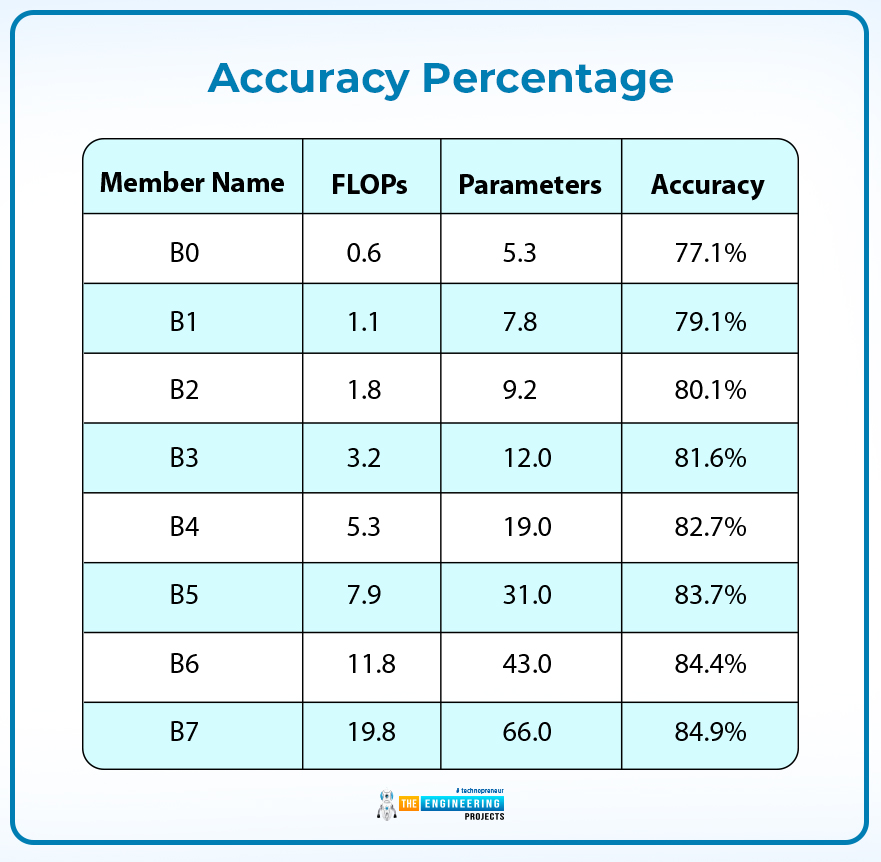
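
To make the idea of counting weights and biases concrete, here is a minimal sketch for a tiny, made-up network. The helper names `conv2d_params` and `dense_params` and the three layers are hypothetical; they only show how the per-layer counts add up.

```python
def conv2d_params(in_ch, out_ch, kernel, bias=True):
    """Weights (plus optional biases) of a standard 2D convolution."""
    weights = kernel * kernel * in_ch * out_ch
    return weights + (out_ch if bias else 0)


def dense_params(in_features, out_features, bias=True):
    """Weights (plus optional biases) of a fully connected layer."""
    return in_features * out_features + (out_features if bias else 0)


# Hypothetical three-layer network, used only to show the bookkeeping.
layer_counts = [
    conv2d_params(3, 32, 3),    # RGB input -> 32 channels
    conv2d_params(32, 64, 3),   # 32 -> 64 channels
    dense_params(64, 10),       # classifier with 10 outputs
]
total = sum(layer_counts)
print(f"{total} parameters = {total / 1e6:.3f} million")
```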

Accuracy Percentage

Accuracy is the most basic and important measure of a neural network's performance. The members of the EfficientNet family vary in accuracy, and users have to choose the best one according to the requirements of the task.

The different family members of EfficientNet are indicated by a number in the name (B0 to B7), and each member is larger than the previous one. As a result, accuracy increases along with the computational cost. Here is the table that shows the differences among them:

| Member Name | FLOPs (billions) | Parameters (millions) | Accuracy |
| --- | --- | --- | --- |
| B0 | 0.6 | 5.3 | 77.1% |
| B1 | 1.1 | 7.8 | 79.1% |
| B2 | 1.8 | 9.2 | 80.1% |
| B3 | 3.2 | 12.0 | 81.6% |
| B4 | 5.3 | 19.0 | 82.7% |
| B5 | 7.9 | 31.0 | 83.7% |
| B6 | 11.8 | 43.0 | 84.4% |
| B7 | 19.8 | 66.0 | 84.9% |

This table shows the trade-off among EfficientNet models: a larger model (increased cost) is more accurate, and a smaller model is cheaper but less accurate. Each of the eight members suits particular types of tasks, and when choosing one for a given task, other factors, such as the target hardware, also matter.

Features of EfficientNet

The workings and structure of every family member of EfficientNet are alike. Therefore, here is a simple and general overview of the features of EfficientNet, which shows how the network works and where its advantages come from.
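
One way to use this trade-off in practice is to pick the most accurate member that still fits a compute or size budget. The sketch below does that with the figures copied from the table in this article; the function `pick_member` and its parameter names are hypothetical and exist only for this illustration.

```python
# (FLOPs in billions, parameters in millions, accuracy in %),
# copied from the table in this article.
EFFICIENTNET_TABLE = {
    "B0": (0.6, 5.3, 77.1),
    "B1": (1.1, 7.8, 79.1),
    "B2": (1.8, 9.2, 80.1),
    "B3": (3.2, 12.0, 81.6),
    "B4": (5.3, 19.0, 82.7),
    "B5": (7.9, 31.0, 83.7),
    "B6": (11.8, 43.0, 84.4),
    "B7": (19.8, 66.0, 84.9),
}


def pick_member(max_flops_b=None, max_params_m=None):
    """Return the most accurate member that fits both budgets, or None."""
    candidates = [
        (accuracy, name)
        for name, (flops, params, accuracy) in EFFICIENTNET_TABLE.items()
        if (max_flops_b is None or flops <= max_flops_b)
        and (max_params_m is None or params <= max_params_m)
    ]
    return max(candidates)[1] if candidates else None


print(pick_member(max_flops_b=4.0))     # budget on computation only
print(pick_member(max_params_m=10.0))   # budget on model size only
print(pick_member(max_flops_b=0.1))     # nothing fits this budget
```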

Compound Scaling

One of the most significant features of this family is compound scaling, which sets it apart from other approaches to scaling neural networks. It balances the following dimensions of the network:

- Depth of network (number of layers)

- Width of the network (number of channels or neurons in each layer)

- Input image resolution

Because all three dimensions are scaled together using a single compound coefficient, instead of one at a time, EfficientNet achieves better accuracy for a given amount of computation.

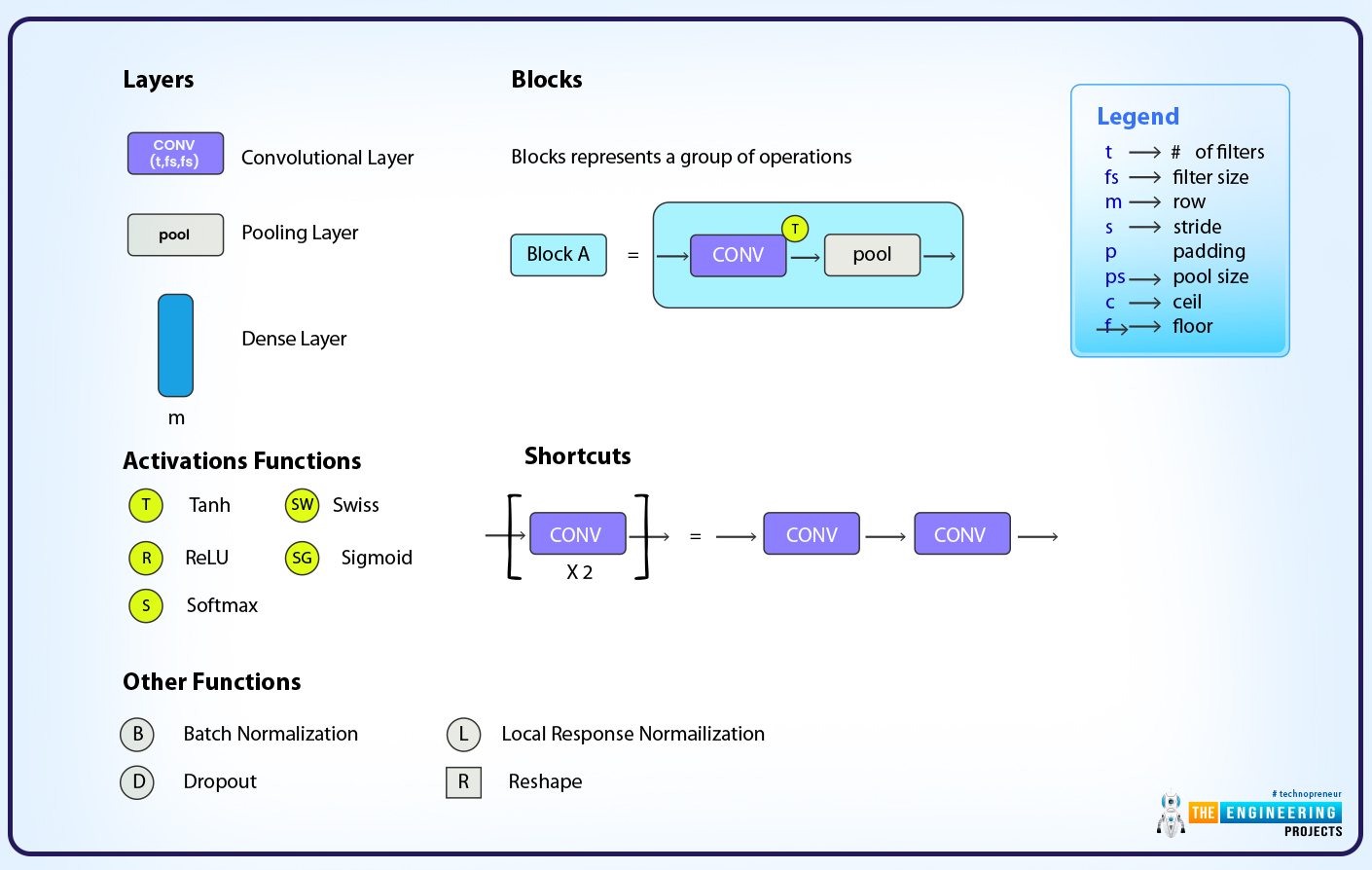
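
The idea can be sketched with a few lines of arithmetic. In the EfficientNet paper, depth, width, and resolution grow as α^φ, β^φ, and γ^φ, with α = 1.2, β = 1.1, and γ = 1.15 reported for the B0 baseline, chosen so that α·β²·γ² is close to 2. The function name `compound_scale` is an assumption for this example, and the released models use rounded values, so treat this as an illustration of the rule rather than a recipe for reproducing B1 to B7.

```python
# Base multipliers for depth, width, and resolution (values from the paper).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi):
    """Return (depth, width, resolution, FLOPs growth) multipliers for phi."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = GAMMA ** phi
    # Cost grows linearly with depth and quadratically with width and resolution.
    flops_growth = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return depth, width, resolution, flops_growth


for phi in range(4):
    d, w, r, f = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution x{r:.2f}, FLOPs x{f:.2f}")
```

Each step of φ roughly doubles the computation while keeping the three dimensions in a fixed ratio.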

Depthwise Convolutions

A key difference between traditional CNNs and EfficientNet is the use of depthwise separable convolutions, which make the network less complex than a CNN built from standard convolutions. In the depthwise step, each input channel is filtered by its own separate convolutional kernel.

The result is then passed through a pointwise (1x1) convolution, which combines the outputs of the depthwise step across channels to produce the output channels. A standard convolution requires a large number of parameters, but this technique needs far fewer and significantly reduces the complexity.
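
The two steps can be sketched in plain Python on a tiny feature map. This is a minimal illustration, assuming no padding, stride 1, and a feature map stored as a list of 2D channels; the function names and the example numbers are made up for this sketch. As in most deep learning libraries, the "convolution" here is implemented as a sliding dot product.

```python
def conv2d_single(channel, kernel):
    """Slide one square kernel over one channel (no padding, stride 1)."""
    k = len(kernel)
    out_h = len(channel) - k + 1
    out_w = len(channel[0]) - k + 1
    return [
        [
            sum(channel[i + di][j + dj] * kernel[di][dj]
                for di in range(k) for dj in range(k))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def depthwise_conv(feature_map, kernels):
    """Depthwise step: channel c is filtered only by kernels[c]."""
    return [conv2d_single(ch, ker) for ch, ker in zip(feature_map, kernels)]


def pointwise_conv(feature_map, weights):
    """Pointwise (1x1) step: each output channel mixes all input channels."""
    height, width = len(feature_map[0]), len(feature_map[0][0])
    return [
        [
            [sum(w * ch[i][j] for w, ch in zip(row, feature_map))
             for j in range(width)]
            for i in range(height)
        ]
        for row in weights
    ]


# Two 3x3 input channels, one 2x2 kernel per channel, three output channels.
x = [
    [[1, 2, 3], [4, 5, 6], [7, 8, 9]],
    [[1, 0, 1], [0, 1, 0], [1, 0, 1]],
]
dw_kernels = [[[1, 0], [0, 1]], [[1, 1], [1, 1]]]
pw_weights = [[1, 1], [1, -1], [0, 2]]

dw_out = depthwise_conv(x, dw_kernels)
y = pointwise_conv(dw_out, pw_weights)
print(len(dw_out), "channels after depthwise,", len(y), "after pointwise")
```

In this toy case the two steps hold 2·4 + 2·3 = 14 weights, whereas a standard 2x2 convolution from 2 to 3 channels would need 2·2·2·3 = 24.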

Mobile Inverted Bottleneck Convolution (MBConv)

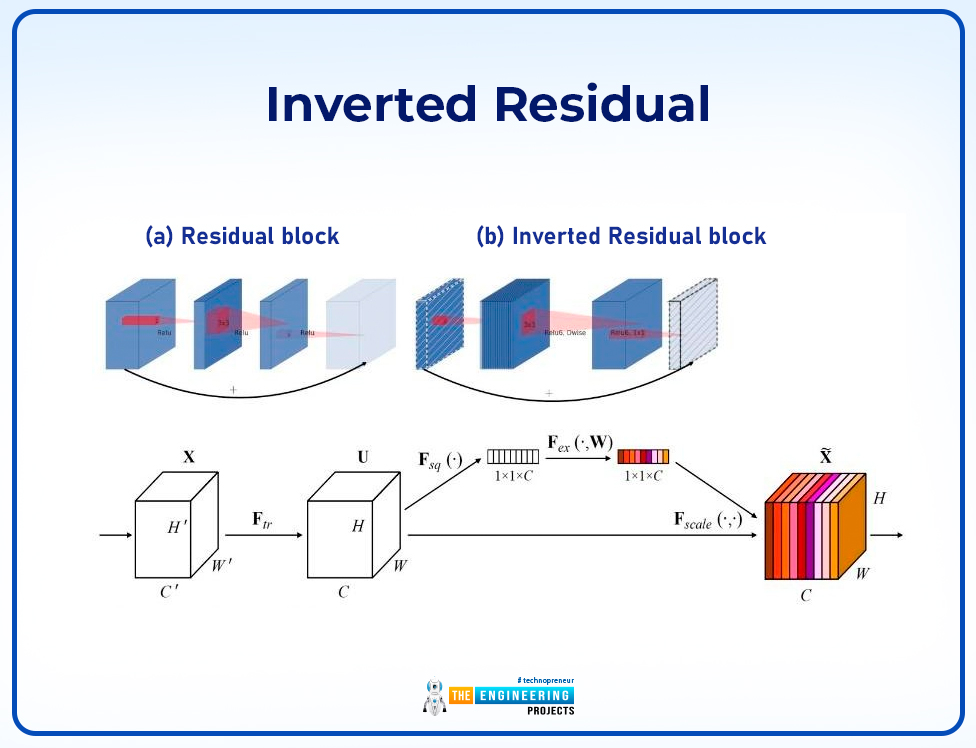

The EfficientNet family uses a more recent type of convolutional block known as MBConv as its main building block. It combines pointwise and depthwise convolutions in a single block, which reduces the number of floating-point operations compared with standard convolutions. The two key features of this architecture are:

- Inverted Bottleneck

- Inverted residual

Here is a simple introduction to both:

Inverted Bottleneck

The inverted bottleneck has three main convolutional layers:

- Pointwise Convolution (1x1 Conv) expands the number of channels, so the block becomes wider in the middle. This may seem like extra work, but it gives the next layer a richer representation to filter.

- Depthwise Convolution (3x3 DWConv) keeps the computation low even on the expanded channels because it applies a separate filter to every channel.

- Pointwise Convolution (1x1 Conv) is then responsible for projecting the channels back down to a small number. The block is called inverted because a classic bottleneck does the opposite: it narrows first and widens at the end.
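
Here is a minimal sketch of the channel bookkeeping in an inverted bottleneck, assuming the MobileNetV2-style layout in which the first 1x1 convolution widens the block and the last one narrows it again. The function name, the expansion ratio of 6, and the channel counts are assumptions chosen for illustration.

```python
def inverted_bottleneck_shapes(in_ch, out_ch, expand_ratio=6, kernel=3):
    """Return (name, in_channels, out_channels, weights) per layer; no biases."""
    mid_ch = in_ch * expand_ratio
    return [
        # 1x1 convolution widens the block.
        ("expand 1x1", in_ch, mid_ch, in_ch * mid_ch),
        # Depthwise convolution: one kernel per channel, channel count unchanged.
        (f"depthwise {kernel}x{kernel}", mid_ch, mid_ch, kernel * kernel * mid_ch),
        # 1x1 convolution projects back to a narrow output.
        ("project 1x1", mid_ch, out_ch, mid_ch * out_ch),
    ]


layers = inverted_bottleneck_shapes(in_ch=16, out_ch=24)
for name, c_in, c_out, weights in layers:
    print(f"{name:>14}: {c_in:>3} -> {c_out:>3} channels, {weights} weights")
total_weights = sum(weights for *_, weights in layers)
print("total weights:", total_weights)
```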

Inverted Residual

The inverted residual adds a shortcut connection around the inverted bottleneck, so the block's input is added to its output, and as a result, inverted residual blocks are formed. Unlike a classic residual block, the shortcut connects the narrow ends of the block rather than the wide ones. This is important because it helps reduce the loss of information as the data passes through the convolutions.
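
The shortcut itself is just an element-wise addition, which only works when the block leaves the shape unchanged. The sketch below shows that rule on a flat list of numbers; `toy_block` is a hypothetical stand-in for the three convolutions, not a real MBConv implementation.

```python
def toy_block(x, stride=1):
    """Stand-in for the convolutions: halves values, subsamples by stride."""
    return [0.5 * v for v in x[::stride]]


def inverted_residual(x, stride=1):
    """Apply the block and add the input back when the shapes match."""
    y = toy_block(x, stride)
    # The shortcut is only valid when the output has the same shape as the input.
    if stride == 1 and len(y) == len(x):
        return [a + b for a, b in zip(x, y)]
    return y


x = [1.0, 2.0, 3.0, 4.0]
print(inverted_residual(x))            # shortcut applied
print(inverted_residual(x, stride=2))  # shape changes, so no shortcut
```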

Squeeze and Excite Block

The representational power of EfficientNet can be enhanced by using a block called Squeeze and Excite, or SE. It squeezes each channel into a single number by global average pooling and then uses a small gating network to decide how strongly each channel should be weighted. It is not specific to EfficientNet but is a separate, general-purpose block that EfficientNet incorporates into its MBConv blocks. The reason to introduce it here is to show that different building blocks can be combined with EfficientNet to enhance efficiency and performance.

Flexibility in EfficientNet

EfficientNet is a family, and therefore it offers several models from which the user can choose the most suitable one. The eight members of this series (B0 to B7) each suit particular tasks, so the user can pick the one whose performance best matches the requirements. Each offers a different combination of accuracy and size, which is why the family attracts so many users.

Hence, this was all about EfficientNet, and we have understood all the basic features of this neural network. EfficientNet is a set of neural networks that differ from each other in accuracy and size, but their workings and structures are similar.

EfficientNet was developed by researchers at Google AI, and the inspiration was the CNN. Its members are considered lightweight versions of convolutional networks and provide better performance because of compound scaling and depthwise convolutions. I hope it was helpful for you, and if you want to know more about modern neural networks, then stay with us because we will talk about these in the coming lectures.
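
A squeeze-and-excite block can be sketched in three steps: squeeze each channel to one number, pass those numbers through a small gating network, and rescale the channels by the resulting gates. The sketch below uses ReLU and sigmoid as in the original SE design (implementations differ in the choice of activation); the function name, the weights, and the tiny input are assumptions for illustration only.

```python
import math


def squeeze_excite(feature_map, w_reduce, w_expand):
    """Rescale each channel of feature_map (a list of 2D lists) by a learned gate."""
    # Squeeze: global average pooling gives one number per channel.
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_map]
    # Excite, part 1: reduce to a small hidden vector with ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed)))
              for row in w_reduce]
    # Excite, part 2: expand back to one sigmoid gate per channel.
    gates = [1 / (1 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w_expand]
    # Scale: multiply every value in a channel by that channel's gate.
    return [[[g * v for v in row] for row in ch]
            for g, ch in zip(gates, feature_map)]


# Two 2x2 channels, one hidden unit; the weights are made up for the example.
x = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
w_reduce = [[1.0, 1.0]]      # 2 channels -> 1 hidden unit
w_expand = [[1.0], [-1.0]]   # 1 hidden unit -> 2 gates
out = squeeze_excite(x, w_reduce, w_expand)
print(out)
```

With these weights the first channel is kept almost unchanged while the second is strongly suppressed, which is the channel reweighting the block is meant to provide.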

Deep Learning

Deep Learning ayeshayounas
